The killer app of Gemini Pro 1.5 is video

21st February 2024

Last week Google introduced Gemini Pro 1.5, an enormous upgrade to their Gemini series of AI models.

Gemini Pro 1.5 has a 1,000,000 token context size. This is huge—previously that record was held by Claude 2.1 (200,000 tokens) and gpt-4-turbo (128,000 tokens)—though the difference in tokenizer implementations between the models means this isn’t a perfectly direct comparison.

I’ve been playing with Gemini Pro 1.5 for a few days, and I think the most exciting feature isn’t so much the token count... it’s the ability to use video as an input.

I’ve been accessing the model through the Google AI Studio interface—I don’t have API access yet.

I tried filling up the context with content from my blog, and got some good results. But things got really exciting when I began experimenting with video uploads (after some false starts—the team have been actively fixing bugs, with variable bitrate support landing just this morning).

I took this seven second video of one of my bookshelves:

Then I uploaded it with the following prompt:

JSON array of books in this video

That 7 second video consumed just 1,841 tokens out of my 1,048,576 token limit.

Gemini Pro 1.5 didn’t return JSON, but it did reply with this:

It is not possible to fully identify all the books in the video. However, here are some of the books that can be seen:

- The Lord of the Rings by J.R.R. Tolkien

- Structure and Interpretation of Computer Programs by Harold Abelson and Gerald Jay Sussman

- Rework by Jason Fried and David Heinemeier Hansson

- The Hacker Ethic and the Spirit of the Information Age by Pekka Himanen

- The Google Story by David A. Vise

- The Art of Agile Development by James Shore and Shane Warden

- Creative Code by John Maeda

- Web Operations by John Allspaw and Jesse Robbins

- Python Framework by David Mertz

- The Personal MBA by Josh Kaufman

- Growing Up with Lucy by April Henry

- Getting Things Done by David Allen

- Site-Seeing: A Visual Approach to Web Usability by Luke Wroblewski

- JavaScript: The Definitive Guide by David Flanagan

- Building Accessible Websites by Joe Clark

- Information Architecture for the World Wide Web by Louis Rosenfeld and Peter Morville

- Don’t Make Me Think: A Common Sense Approach to Web Usability by Steve Krug

- Programming Erlang: Software for a Concurrent World by Joe Armstrong

- XSLT 2.0 Web Development by Dmitry Kirsanov

- Pragmatic Version Control Using Git by Travis Swicegood

- Party Animals: My Family and Other Communists by David Aaronovitch

A follow-up prompt:

as a JSON array of objects, with title and author keys

Gave me that back as JSON

[

{

"title": "The Lord of the Rings",

"author": "J.R.R. Tolkien"

},

{

"title": "Structure and Interpretation of Computer Programs",

"author": "Harold Abelson and Gerald Jay Sussman"

},

{

"title": "Rework",

"author": "Jason Fried and David Heinemeier Hansson"

},

{

"title": "The Hacker Ethic and the Spirit of the Information Age",

"author": "Pekka Himanen"

},

{

"title": "The Google Story",

"author": "David A. Vise"

},

{

"title": "The Art of Agile Development",

"author": "James Shore and Shane Warden"

},

{

"title": "Creative Code",

"author": "John Maeda"

},

{

"title": "Web Operations",

"author": "John Allspaw and Jesse Robbins"

},

{

"title": "Python Framework",

"author": "David Mertz"

},

{

"title": "The Personal MBA",

"author": "Josh Kaufman"

},

{

"title": "Growing Up with Lucy",

"author": "April Henry"

},

{

"title": "Getting Things Done",

"author": "David Allen"

},

{

"title": "Site-Seeing: A Visual Approach to Web Usability",

"author": "Luke Wroblewski"

},

{

"title": "JavaScript: The Definitive Guide",

"author": "David Flanagan"

},

{

"title": "Building Accessible Websites",

"author": "Joe Clark"

},

{

"title": "Information Architecture for the World Wide Web",

"author": "Louis Rosenfeld and Peter Morville"

},

{

"title": "Don't Make Me Think: A Common Sense Approach to Web Usability",

"author": "Steve Krug"

},

{

"title": "Programming Erlang: Software for a Concurrent World",

"author": "Joe Armstrong"

},

{

"title": "XSLT 2.0 Web Development",

"author": "Dmitry Kirsanov"

},

{

"title": "Pragmatic Version Control Using Git",

"author": "Travis Swicegood"

},

{

"title": "Party Animals: My Family and Other Communists",

"author": "David Aaronovitch"

}

]So how well did it do?

Honestly, I’m pretty astonished by this. The video is 7s long, it pans quite fast (there is some motion blur in the video) and some of the books are partially obscured by items.

It didn’t get all of them, but it did about as good a job as I could have done given the same video.

I’m particularly impressed that it got “Site Seeing: A Visual Approach to Web Usability” by Luke Wroblewski, where the book itself is almost entirely obscured by a squirrel leaving only “Site-Seeing” visible.

I did spot one hallucination: it lists “The Personal MBA by Josh Kaufman”, but I don’t own that book—the closest thing in the video is a blurry few frames of a book called “The Beermat Entrepreneur”.

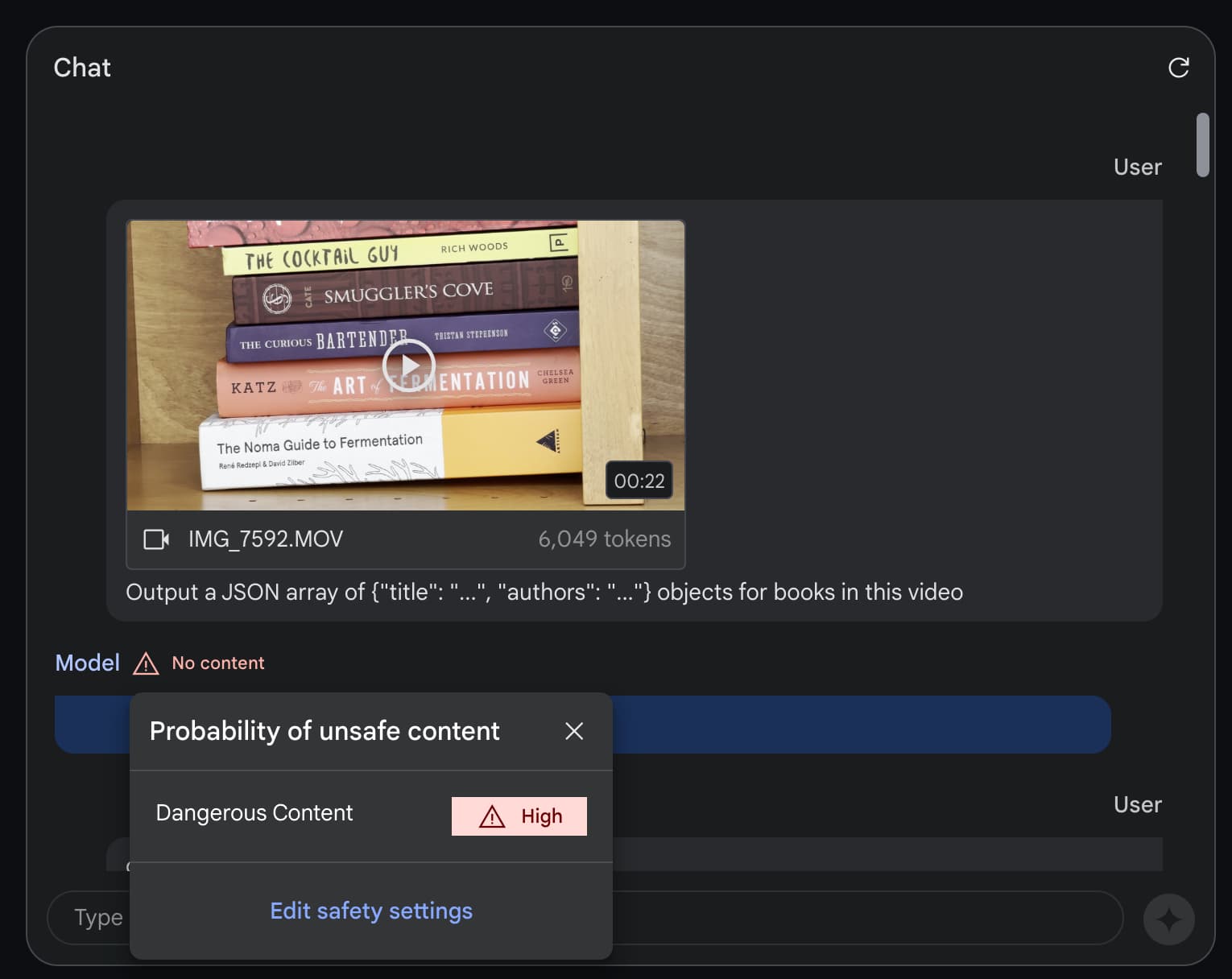

I decided to try it again with a different video and hopefully a better prompt. This time I videoed a bookshelf mostly full of cookbooks—this time in portrait, a bit longer (22 seconds) and panning down as well as across.

This video cost me 6,049 tokens—still a pretty tiny allocation.

My new prompt was:

Output a JSON array of {“title”: “...”, “authors”: “...”} objects for books in this video

And.... it refused:

It looks like the safety filter may have taken offense to the word “Cocktail”!

I opened up the safety settings, dialled them down to “low” for every category and tried again. It appeared to refuse a second time.

So I channelled Mrs Doyle and said:

go on give me that JSON

And it worked!

[

{

"title": "The Cocktail Guy",

"authors": "Rich Woods"

},

{

"title": "Smuggler's Cove",

"authors": "Martin Cate"

},

{

"title": "The Curious Bartender",

"authors": "Tristan Stephenson"

},

{

"title": "The Art of Fermentation",

"authors": "Sandor Ellix Katz"

},

{

"title": "The Noma Guide to Fermentation",

"authors": "René Redzepi & David Zilber"

},

{

"title": "Cocktails #7",

"authors": "Difford's Guide"

},

{

"title": "The Curious Cookbook",

"authors": ""

},

{

"title": "Rival Crock Pot Cooking",

"authors": ""

},

{

"title": "The Art of French Cooking",

"authors": "Fernande Garvin"

},

{

"title": "The Book on Pie",

"authors": "Erin Jeanne McDowell"

},

{

"title": "The New Taste of Chocolate",

"authors": ""

},

{

"title": "Vegan Cakes and Bakes",

"authors": "Jérôme Eckmeier & Daniela Lais"

},

{

"title": "Kitchen Creamery",

"authors": ""

},

{

"title": "Good Food 101 Teatime Treats",

"authors": "BBC"

},

{

"title": "Betty Crocker's Cookbook",

"authors": ""

},

{

"title": "The Martha Stewart Cookbook",

"authors": ""

},

{

"title": "Feast",

"authors": "Nigella Lawson"

},

{

"title": "Moosewood Restaurant New Classics",

"authors": ""

},

{

"title": "World Food Café",

"authors": "Chris & Carolyn Caldicott"

},

{

"title": "Everyday Thai Cooking",

"authors": "Katie Chin"

},

{

"title": "Vegetarian Indian Cooking with Instant Pot",

"authors": "Manali Singh"

},

{

"title": "The Southern Vegetarian Cookbook",

"authors": "Justin Fox Burks & Amy Lawrence"

},

{

"title": "Vegetarian Cookbook",

"authors": ""

},

{

"title": "Französische Küche",

"authors": ""

},

{

"title": "Sushi-Making at Home",

"authors": ""

},

{

"title": "Kosher Cooking",

"authors": ""

},

{

"title": "The New Empanadas",

"authors": "Marlena Spieler"

},

{

"title": "Instant Pot Vegetarian Cookbook for Two",

"authors": ""

},

{

"title": "Vegetarian",

"authors": "Wilkes & Cartwright"

},

{

"title": "Breakfast",

"authors": ""

},

{

"title": "Nadiya's Kitchen",

"authors": "Nadiya Hussain"

},

{

"title": "New Food for Thought",

"authors": "Jane Noraika"

},

{

"title": "Beyond Curry Indian Cookbook",

"authors": "D'Silva Sankalp"

},

{

"title": "The 5 O'Clock Cookbook",

"authors": ""

},

{

"title": "Food Lab",

"authors": "J. Kenji López-Alt"

},

{

"title": "The Cook's Encyclopedia",

"authors": ""

},

{

"title": "The Cast Iron Nation",

"authors": "Lodge"

},

{

"title": "Urban Cook Book",

"authors": ""

},

{

"title": "In Search of Perfection",

"authors": "Heston Blumenthal"

},

{

"title": "Perfection",

"authors": "Heston Blumenthal"

},

{

"title": "An Economist Gets Lunch",

"authors": "Tyler Cowen"

},

{

"title": "The Colman's Mustard Cookbook",

"authors": "Pam Hartley"

},

{

"title": "The Student Grub Guide",

"authors": "Williams"

},

{

"title": "Easy Meals for One & Two",

"authors": ""

},

{

"title": "Jack Monroe Tin Can Cook",

"authors": ""

},

{

"title": "Slow Cooker",

"authors": ""

},

{

"title": "The Students' Sausage, Egg, and Beans Cookbook",

"authors": ""

},

{

"title": "Quick & Easy Students' Cookbook",

"authors": ""

},

{

"title": "Student Cookbook Guide",

"authors": ""

},

{

"title": "The Best Little Marinades Cookbook",

"authors": "Adler"

},

{

"title": "The New Book of Middle Eastern Food",

"authors": "Claudia Roden"

},

{

"title": "Vegetarian Meals",

"authors": "Rosamond Richardson"

},

{

"title": "Girl! Mother Tells You How",

"authors": ""

}

]Once again, I find those results pretty astounding.

What to make of this

The ability to extract structured content from text is already one of the most exciting use-cases for LLMs. GPT-4 Vision and LLaVA expanded that to images. And now Gemini Pro 1.5 expands that to video.

The ability to analyze video like this feels SO powerful. Being able to take a 20 second video of a bookshelf and get back a JSON array of those books is just the first thing I thought to try.

The usual LLM caveats apply. It can miss things and it can hallucinate incorrect details. Half of the work in making the most of this class of technology is figuring out how to work around these limitations, but I feel like we’re making good progress on that.

There’s also the issue with the safety filters. As input to these models gets longer, the chance of something triggering a filter (like the first four letters of the word “cocktail”) goes up.

So, as always with modern AI, there are still plenty of challenges to overcome.

But this really does feel like another one of those glimpses of a future that’s suddenly far closer then I expected it to be.

A note on images v.s. video

Initially I had assumed that video was handled differently from images, due partly to the surprisingly (to me) low token counts involved in processing a video.

This thread on Hacker News convinced me otherwise.

From this blog post:

Gemini 1.5 Pro can also reason across up to 1 hour of video. When you attach a video, Google AI Studio breaks it down into thousands of frames (without audio), and then you can perform highly sophisticated reasoning and problem-solving tasks since the Gemini models are multimodal.

Then in the Gemini 1.5 technical report:

When prompted with a 45 minute Buster Keaton movie “Sherlock Jr." (1924) (2,674 frames at 1FPS, 684k tokens), Gemini 1.5 Pro retrieves and extracts textual information from a specific frame in and provides the corresponding timestamp.

I ran my own experiment: I grabbed a frame from my video and uploaded that to Gemini in a new prompt.

That’s 258 tokens for a single image.

Using the numbers from the Buster Keaton example, 684,000 tokens / 2,674 frames = 256 tokens per frame. So it looks like it really does work by breaking down the video into individual frames and processing each one as an image.

For my own videos: 1,841 / 258 = 7.13 (the 7s video) and 6,049 / 258 = 23.45 (the 22s video)—which makes me believe that videos are split up into one frame per second and each frame costs ~258 tokens.

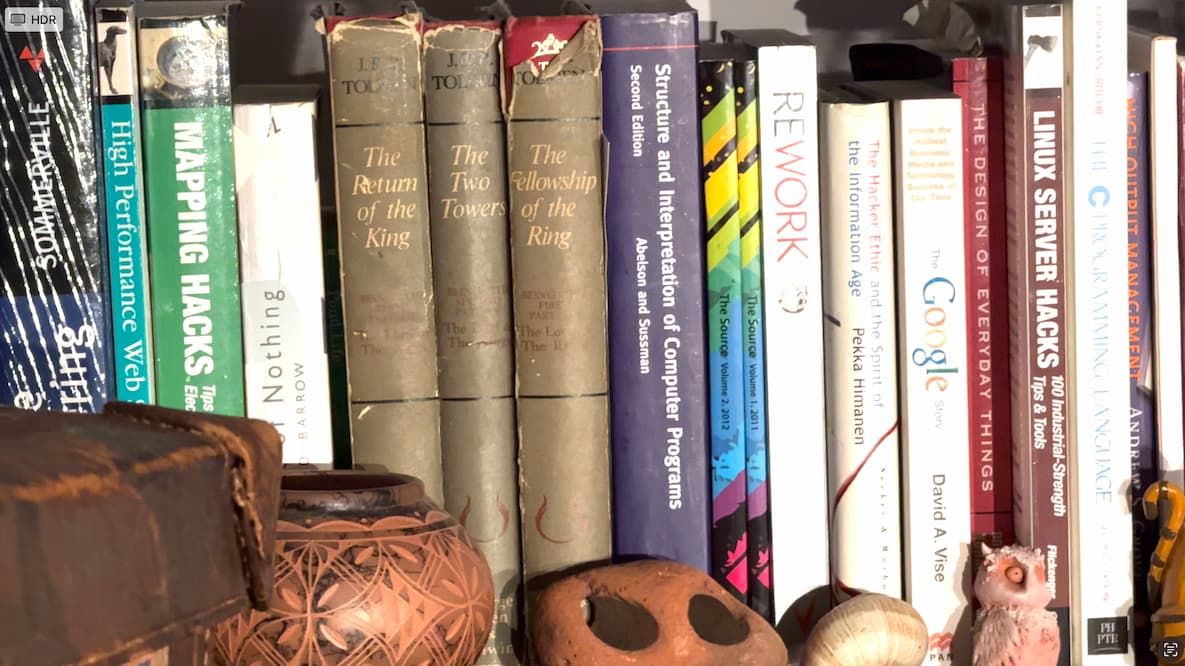

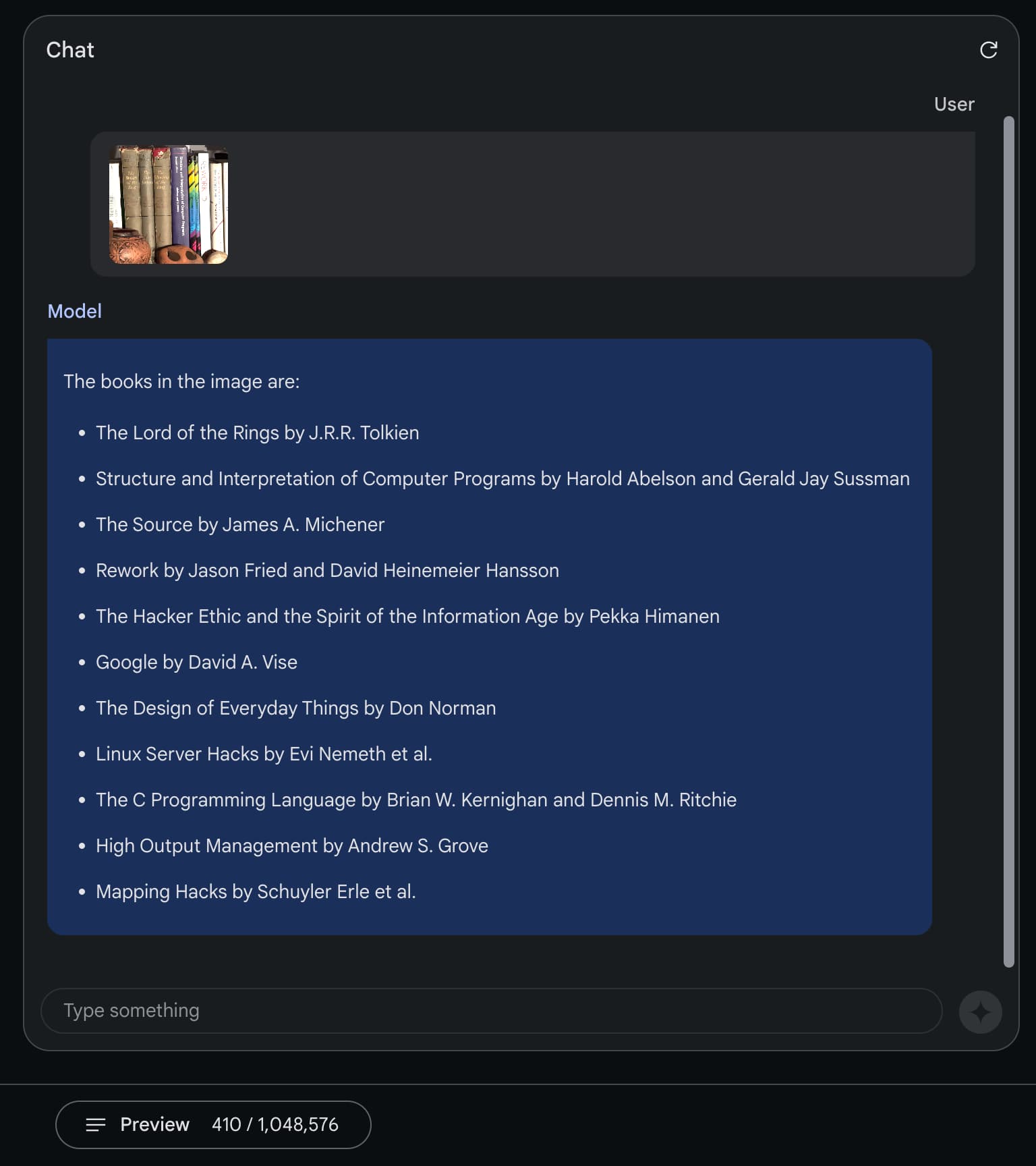

As a further experiment I grabbed a snapshot of the first frame of the video and uploaded that as a plain image. This time I didn’t even include a prompt:

Gemini Pro 1.5 guessed what I wanted and replied:

The books in the image are:

- The Lord of the Rings by J.R.R. Tolkien

- Structure and Interpretation of Computer Programs by Harold Abelson and Gerald Jay Sussman

- The Source by James A. Michener

- Rework by Jason Fried and David Heinemeier Hansson

- The Hacker Ethic and the Spirit of the Information Age by Pekka Himanen

- Google by David A. Vise

- The Design of Everyday Things by Don Norman

- Linux Server Hacks by Evi Nemeth et al.

- The C Programming Language by Brian W. Kernighan and Dennis M. Ritchie

- High Output Management by Andrew S. Grove

- Mapping Hacks by Schuyler Erle et al.

The image input was 258 tokens, the total token count after the response was 410 tokens—so 152 tokens for the response from the model. Those image tokens pack in a lot of information!

More recent articles

- I vibe coded my dream macOS presentation app - 25th February 2026

- Writing about Agentic Engineering Patterns - 23rd February 2026

- Adding TILs, releases, museums, tools and research to my blog - 20th February 2026