Why I invented “dash encoding”, a new encoding scheme for URL paths

5th March 2022

Datasette now includes its own custom string encoding scheme, which I’ve called dash encoding. I really didn’t want to have to invent something new here, but unfortunately I think this is the best solution to my very particular problem. Some notes on how dash encoding works and why I created it.

Update 18th March 2022: This turned out not to be the right idea for my project after all! I ended up settling on a Tilde encoding scheme instead.

Table names and rows in URLs

I’ve put a lot of thought into the design of Datasette’s URLs.

Datasette exposes relational databases tables, as both web pages and a JSON API.

Consider a database in a SQLite file called legislators.db, containing a table called legislator_terms (example from this tutorial). The URL path to the web interface for that table will be:

And the JSON API will be here:

(Worth noting that Datasette supports other formats here too—CSV by default, and plugins can add more formats such as GeoJSON or Atom or iCal.)

Datasette also provides pages (and APIs) for individual rows, identified by their primary key:

For tables with compound primary keys, these pages can include the primary key values separated by commas:

This is all pretty straightforward so far. But now we get to the challenge: what if a table’s name or a row’s primary key contains a forward slash or a period character?

This could break the URL scheme!

SQLite table names are allowed to contain almost any character, and Datasette is designed to work with any existing SQLite database—so I can’t guarantee that a table with one of those characters won’t need to be handled.

Consider a database with two tables—one called legislator_terms and another called legislator_terms/1—given the URL /legislators/legislator_terms/1 it’s no longer clear if it refers to the table with that name or the row with primary key 1 in the other table!

A similar problem exists for table names with as legislators.csv—which end in a format. Or primary key string values that end in .json.

Why URL encoding doesn’t work here

Up until now, Datasette has solved this problem using URL percent encoding. This provides a standard mechanism for encoding “special” characters in URLs.

legislator_terms/1 encodes to legislator_terms%2F1

This should be enough to solve the problem. The URL to that weirdly named table can now be:

/legislators/legislator_terms%2F1

When routing the URL, the application can take this into account and identify that this it a table named legislator_terms/1, as opposed to a request for the row with ID 1 in the legislator_terms table.

There are two remaining problems.

Firstly, the “.” character is ignored by URL encoding, so we still can’t tell the difference between /db/table.json and a table called table.json. I worked around this issue in Datasette by supporting an optional alternative ?_format=json parameter, but it’s messy and confusing.

Much more seriously, it turns out there are numerous common pieces of web infrastructure that “helpfully” decode escaped characters in URLs before passing them on to the underlying web application!

I first encountered this in the ASGI standard itself, which decoded characters in the path field before they were passed to the rest of the application.I submitted a PR adding raw_path to ASGI precisely to work around this problem for Datasette.

Over time though, the problem kept cropping up. Datasette aims to run on as many hosting platforms as possible. I’ve seen URL escaping applied at a higher level enough times now to be very suspicious of any load balancer or proxy or other web server mechanism that might end up executing between Datasette and the rest of the web.

Update: Flask core maintainer David Lord confirms on Twitter that this is a long-standing known problem:

This behavior in Apache/nginx/etc is why WSGI/ASGI can’t specify “literal URL the user typed in”, because anything in front of the app might modify slashes or anything else. So all the spec can provide is “decoded URL”.

So, I need a way of encoding a table name that might include / and . characters in a way that will survive some other layer of the stack decoding URL encoded strings in the URL path before Datasette gets to see them!

Introducing dash encoding

That’s where dash encoding comes in. I tried to design the fastest, simplest encoding mechanism I could that would solve this very specific problem.

Loose requirements:

- Reversible—it’s crucial to at any possible value survives a round-trip through the encoding

- Avoid changing the string at all if possible. Otherwise I could use something like base64, but I wanted to keep the name in the URL as close to readable as possible

- Survive interference by proxies and load balancer that might try to be helpful

- Fast to apply the transformation

- As simple as possible

- Easy to implement, including in languages other than Python

Dash encoding consists of three simple steps:

- Replace all single hyphen characters

-with two hyphens-- - Replace any forward slash

/character with hyphen forward slash-/ - Replace any period character

.with hyphen period-.

To reverse the encoding, run those steps backwards.

Here the Python implementation of this encoding scheme:

def dash_encode(s: str) -> str: "Returns dash-encoded string - for example ``/foo/bar`` -> ``-/foo-/bar``" return s.replace("-", "--").replace(".", "-.").replace("/", "-/") def dash_decode(s: str) -> str: "Decodes a dash-encoded string, so ``-/foo-/bar`` -> ``/foo/bar``" return s.replace("-/", "/").replace("-.", ".").replace("--", "-")

And the pytest tests for it:

@pytest.mark.parametrize( "original,expected", ( ("abc", "abc"), ("/foo/bar", "-/foo-/bar"), ("/-/bar", "-/---/bar"), ("-/db-/table---.csv-.csv", "---/db---/table-------.csv---.csv"), ), ) def test_dash_encoding(original, expected): actual = utils.dash_encode(original) assert actual == expected # And test round-trip assert original == utils.dash_decode(actual)

Here’s the full commit.

This meets my requirements.

Capturing these with a regular expression

There was one remaining challenge. Datasette uses regular expressions—inspired by Django—to route requests to the correct page.

I wanted to use a regular expression to extract out dash encoded values, that could also distinguish them from / and - and . characters that were not encoded in that way.

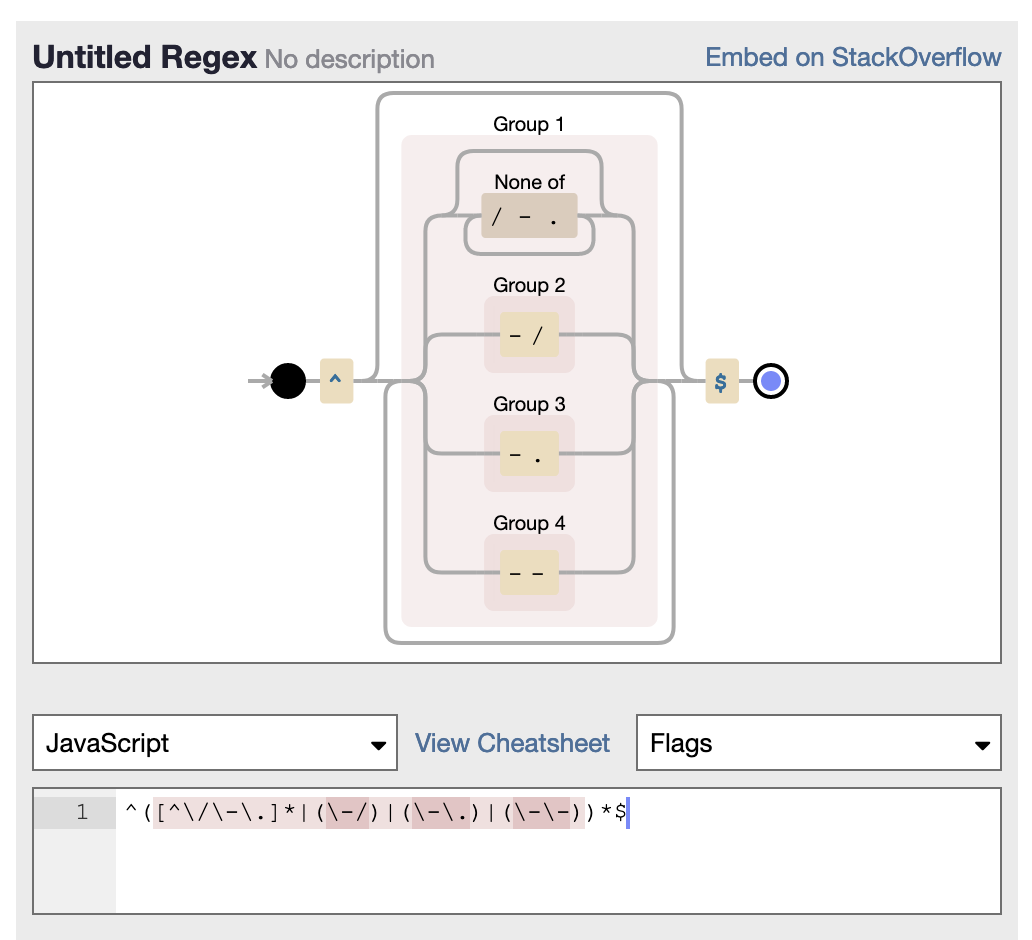

Here’s the pattern I came up with for strings matching this pattern:

([^\/\-\.]*|(\-/)|(\-\.)|(\-\-))*

Broken down:

-

[^\/\-\.]*means 0 or more characters that are NOT one of.or/or-—since we don’t care about those characters at all -

(\-/)means the explicit sequence-/ -

(\-\.)means the explicit sequence-. -

(\-\-)means the explicit sequence-- - Those four are wrapped in a group combined with the

|or operator - The group is then wrapped in a

(..)*—specifying that it can repeat as many times as you like

A better way to break down this regular expression is visually, using Debuggex:

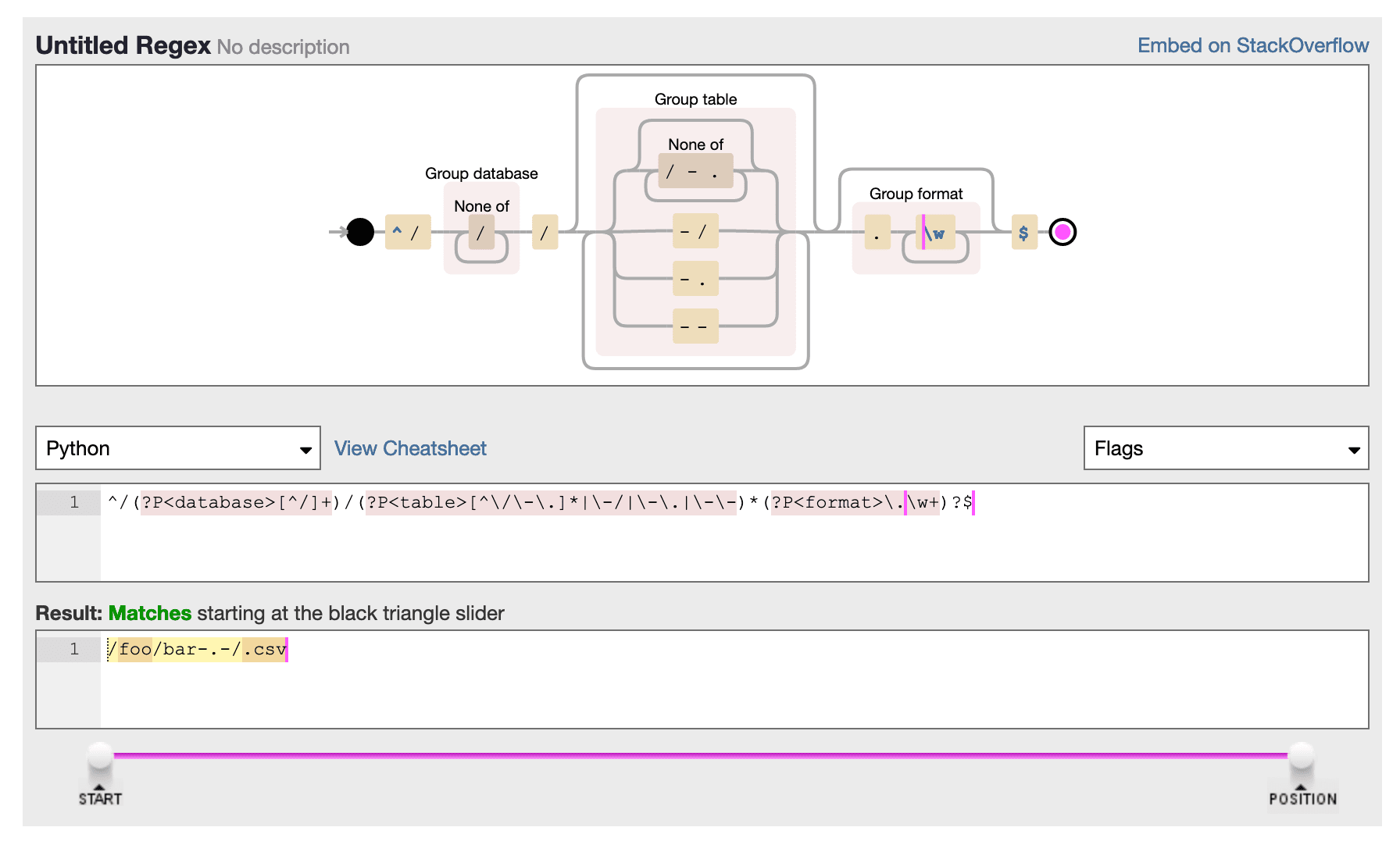

Combining this into the full regular expression that matches a /database/table.format path is even messier, due to the need to add non-capturing group syntax (?:..) and named groups (?P<name>...)—it ends up looking like this:

^/(?P<database>[^/]+)/(?P<table>(?:[^\/\-\.]*|(?:\-/)*|(?:\-\.)*|(?:\-\-)*)*?)\.(?P<format>\w+)?$

Visualized with Debuggex:

Update: Thanks to suggestions from Matthew Somerville I simplified this further to:

^/(?P<database>[^/]+)/(?P<table>[^\/\-\.]*|\-/|\-\.|\-\-)*(?P<format>\.\w+)?$

Next steps: implementation

I’m currently working on integrating it into Datasette in this PR. The full history of my thinking around this problem can be found in issue 1439, with comments stretching back to August last year!

More recent articles

- Gemini 2.0 Flash: An outstanding multi-modal LLM with a sci-fi streaming mode - 11th December 2024

- ChatGPT Canvas can make API requests now, but it's complicated - 10th December 2024

- I can now run a GPT-4 class model on my laptop - 9th December 2024