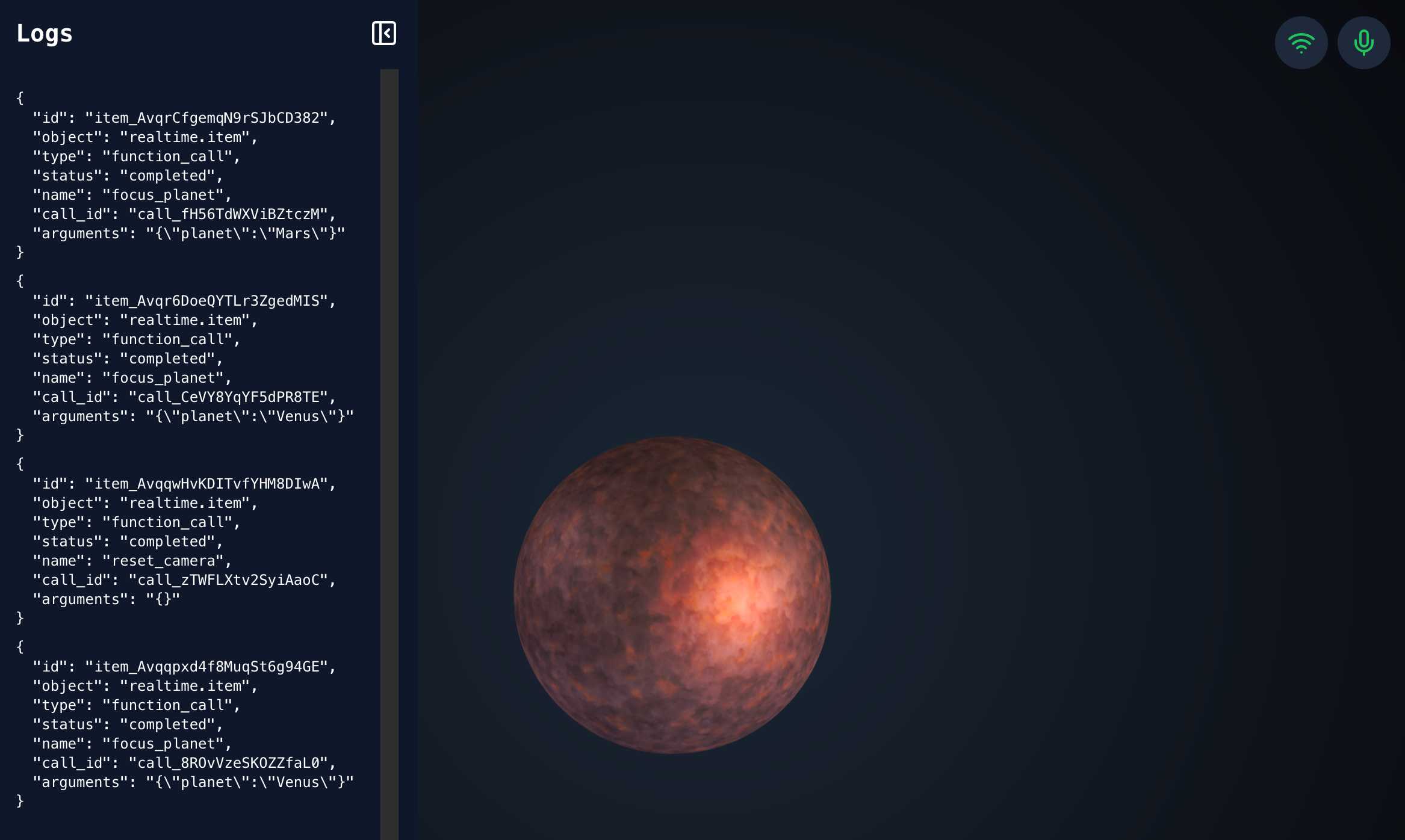

openai-realtime-solar-system. This was my favourite demo from OpenAI DevDay back in October - a voice-driven exploration of the solar system, developed by Katia Gil Guzman, where you could say things out loud like "show me Mars" and it would zoom around showing you different planetary bodies.

OpenAI finally released the code for it, now upgraded to use the new, easier-to-use WebRTC API they released in December.

I ran it like this, loading my OpenAI API key using `llm keys get`:

    cd /tmp
    git clone https://github.com/openai/openai-realtime-solar-system
    cd openai-realtime-solar-system
    npm install
    OPENAI_API_KEY="$(llm keys get openai)" npm run dev

You need to click on both the Wi-Fi icon and the microphone icon before you can instruct it with your voice. Try "Show me Mars".