27th September 2025

Video models are zero-shot learners and reasoners. Fascinating new paper from Google DeepMind which makes a very convincing case that their Veo 3 model - and generative video models in general - serve a similar role in the machine learning visual ecosystem as LLMs do for text.

LLMs took the ability to predict the next token and turned it into general purpose foundation models for all manner of tasks that used to be handled by dedicated models - summarization, translation, part-of-speech tagging, etc. can now all be handled by single huge models, which are getting both more powerful and cheaper as time progresses.

Generative video models like Veo 3 may well serve the same role for vision and image reasoning tasks.

From the paper:

We believe that video models will become unifying, general-purpose foundation models for machine vision just like large language models (LLMs) have become foundation models for natural language processing (NLP). [...]

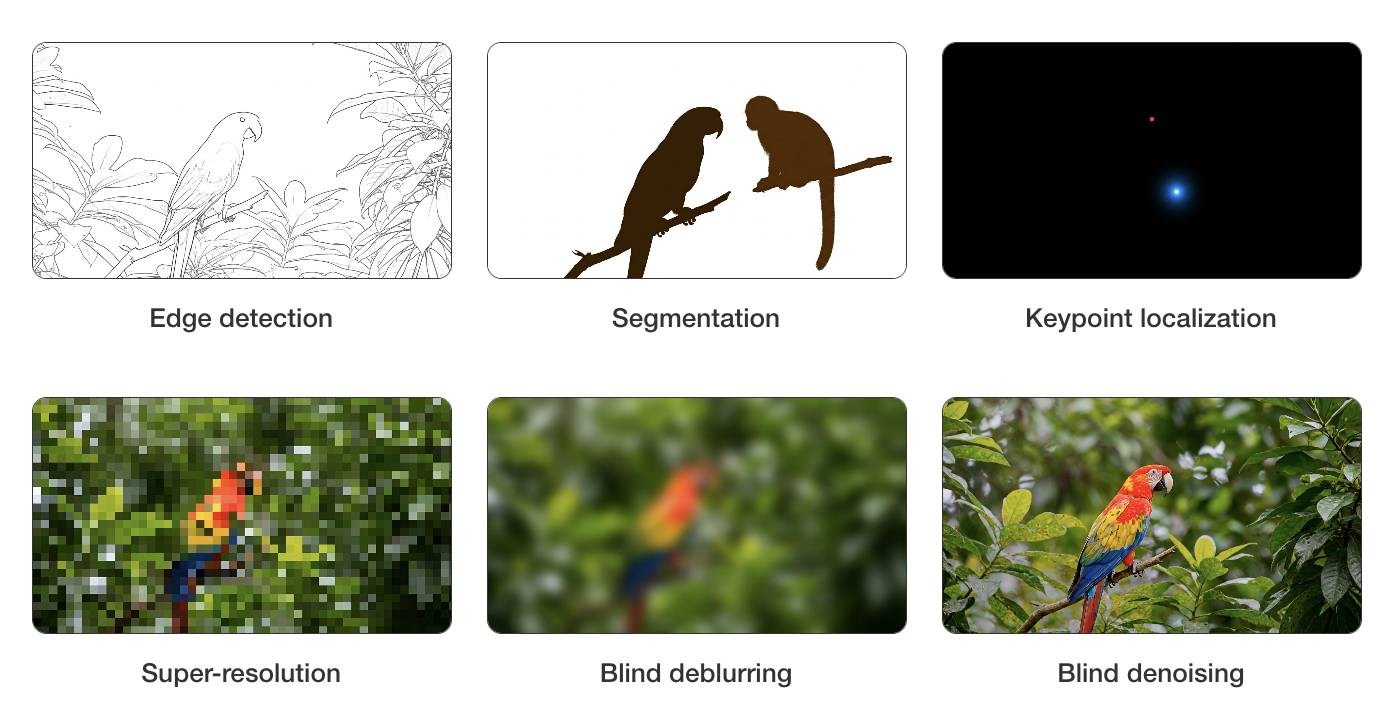

Machine vision today in many ways resembles the state of NLP a few years ago: There are excellent task-specific models like “Segment Anything” for segmentation or YOLO variants for object detection. While attempts to unify some vision tasks exist, no existing model can solve any problem just by prompting. However, the exact same primitives that enabled zero-shot learning in NLP also apply to today’s generative video models—large-scale training with a generative objective (text/video continuation) on web-scale data. [...]

- Analyzing 18,384 generated videos across 62 qualitative and 7 quantitative tasks, we report that Veo 3 can solve a wide range of tasks that it was neither trained nor adapted for.

- Based on its ability to perceive, model, and manipulate the visual world, Veo 3 shows early forms of “chain-of-frames (CoF)” visual reasoning like maze and symmetry solving.

- While task-specific bespoke models still outperform a zero-shot video model, we observe a substantial and consistent performance improvement from Veo 2 to Veo 3, indicating a rapid advancement in the capabilities of video models.

I particularly enjoyed the way they coined the new term chain-of-frames to reflect chain-of-thought in LLMs. A chain-of-frames is how a video generation model can "reason" about the visual world:

Perception, modeling, and manipulation all integrate to tackle visual reasoning. While language models manipulate human-invented symbols, video models can apply changes across the dimensions of the real world: time and space. Since these changes are applied frame-by-frame in a generated video, this parallels chain-of-thought in LLMs and could therefore be called chain-of-frames, or CoF for short. In the language domain, chain-of-thought enabled models to tackle reasoning problems. Similarly, chain-of-frames (a.k.a. video generation) might enable video models to solve challenging visual problems that require step-by-step reasoning across time and space.

They note that, while video models remain expensive to run today, it's likely they will follow a pricing trajectory similar to that of LLMs. I've been tracking LLM prices for a few years now and the difference really is huge - a 1,200x drop in price between GPT-3 in 2022 ($60/million tokens) and GPT-5-Nano today ($0.05/million tokens).
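As a quick sanity check on that 1,200x figure, here's a minimal sketch of the arithmetic. It uses only the two per-million-token prices quoted above; the helper name `price_drop_factor` is purely illustrative and not from the paper or any library.

```python
# Prices quoted in this post, in US dollars per million tokens.
GPT3_2022_PRICE = 60.00
GPT5_NANO_PRICE = 0.05


def price_drop_factor(old_price: float, new_price: float) -> float:
    """Return how many times cheaper new_price is compared to old_price."""
    if new_price <= 0:
        raise ValueError("new_price must be positive")
    return old_price / new_price


factor = price_drop_factor(GPT3_2022_PRICE, GPT5_NANO_PRICE)
print(f"{round(factor):,}x cheaper")
```

Whether video models will see anything like the same curve is speculation on the paper's part; the snippet only confirms that the two LLM prices are consistent with the stated ratio.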

The PDF is 45 pages long but the main paper is just the first 9.5 pages - the rest is mostly appendices. Reading those first 10 pages will give you the full details of their argument.

The accompanying website has dozens of video demos which are worth spending some time with to get a feel for the different applications of the Veo 3 model.

It's worth skimming through the appendices in the paper as well to see examples of some of the prompts they used. They compare some of the exercises against equivalent attempts using Google's Nano Banana image generation model.

For edge detection, for example:

Veo: All edges in this image become more salient by transforming into black outlines. Then, all objects fade away, with just the edges remaining on a white background. Static camera perspective, no zoom or pan.

Nano Banana: Outline all edges in the image in black, make everything else white.
