22nd July 2025 - Link Blog

Qwen/Qwen3-235B-A22B-Instruct-2507. Significant new model release from Qwen, published yesterday without much fanfare. (Update: probably because they were cooking the much larger Qwen3-Coder-480B-A35B-Instruct which they released just now.)

This is a follow-up to their April release of the full Qwen 3 model family, which included a Qwen3-235B-A22B model that could handle both reasoning and non-reasoning prompts (via a /no_think toggle).

The new Qwen3-235B-A22B-Instruct-2507 ditches that mechanism - this is exclusively a non-reasoning model. It looks like Qwen have new reasoning models in the pipeline.

This new model is Apache 2 licensed and comes in two official sizes: a BF16 model (437.91GB of files on Hugging Face) and an FP8 variant (220.20GB). VentureBeat estimate that the large model needs 88GB of VRAM while the smaller one should run in ~30GB.
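Those file sizes line up with simple bytes-per-parameter arithmetic. Here's a rough sanity check, assuming "235B" means exactly 235 billion parameters and that the sizes Hugging Face reports are binary gigabytes (both are my assumptions, not something Qwen states). It only covers the weight files on disk and says nothing about the VRAM estimates.

```python
# Back-of-envelope size of the weight files for a 235B parameter model.
# Assumptions: exactly 235e9 parameters, sizes counted in GiB (1024**3 bytes).
GIB = 1024 ** 3
TOTAL_PARAMS = 235e9


def weights_size_gib(num_params: float, bytes_per_param: float) -> float:
    """Return the approximate size of the weights in GiB."""
    return num_params * bytes_per_param / GIB


bf16_gib = weights_size_gib(TOTAL_PARAMS, 2)  # BF16 stores 2 bytes per parameter
fp8_gib = weights_size_gib(TOTAL_PARAMS, 1)   # FP8 stores 1 byte per parameter

print(f"BF16: {bf16_gib:.1f} GiB")
print(f"FP8:  {fp8_gib:.1f} GiB")
```

Both estimates land within a gigabyte or two of the 437.91GB and 220.20GB listed above, which is about what you'd expect given that the real parameter count isn't a perfectly round 235 billion.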

The benchmarks on these new models look very promising. Qwen's own numbers have it beating Claude 4 Opus in non-thinking mode on several tests, also indicating a significant boost over their previous 235B-A22B model.

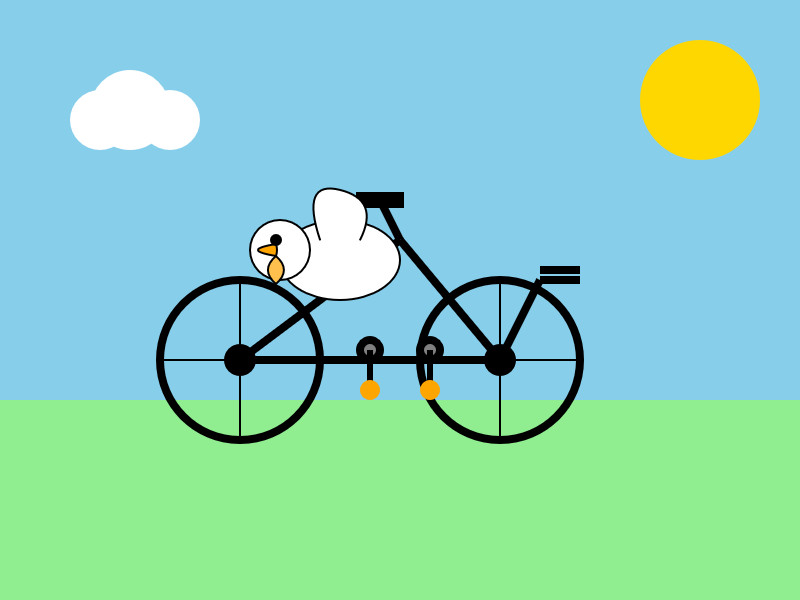

I haven't seen any independent benchmark results yet. Here's what I got for "Generate an SVG of a pelican riding a bicycle", which I ran using the qwen3-235b-a22b-07-25:free model on OpenRouter:

llm install llm-openrouter

llm -m openrouter/qwen/qwen3-235b-a22b-07-25:free \
  "Generate an SVG of a pelican riding a bicycle"
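The same prompt can be run from Python using the `llm` library's Python API. This is a minimal sketch, assuming the llm-openrouter plugin is installed and an OpenRouter key has already been configured. The `extract_svg` and `generate_svg` helpers are hypothetical names of mine, not part of `llm`; the first is there because models often wrap the SVG in extra commentary.

```python
import re
from typing import Optional

MODEL_ID = "openrouter/qwen/qwen3-235b-a22b-07-25:free"
PROMPT = "Generate an SVG of a pelican riding a bicycle"


def extract_svg(text: str) -> Optional[str]:
    """Return the first <svg>...</svg> element found in text, or None."""
    match = re.search(r"<svg\b.*?</svg>", text, re.DOTALL | re.IGNORECASE)
    return match.group(0) if match else None


def generate_svg(prompt: str = PROMPT, model_id: str = MODEL_ID) -> Optional[str]:
    """Send the prompt to the model and return just the SVG markup."""
    # Imported here so extract_svg() can be used without llm installed.
    import llm

    model = llm.get_model(model_id)
    response = model.prompt(prompt)
    return extract_svg(response.text())
```

Calling `generate_svg()` makes a network request to OpenRouter, so the result will differ from run to run.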
