29th April 2025 - Link Blog

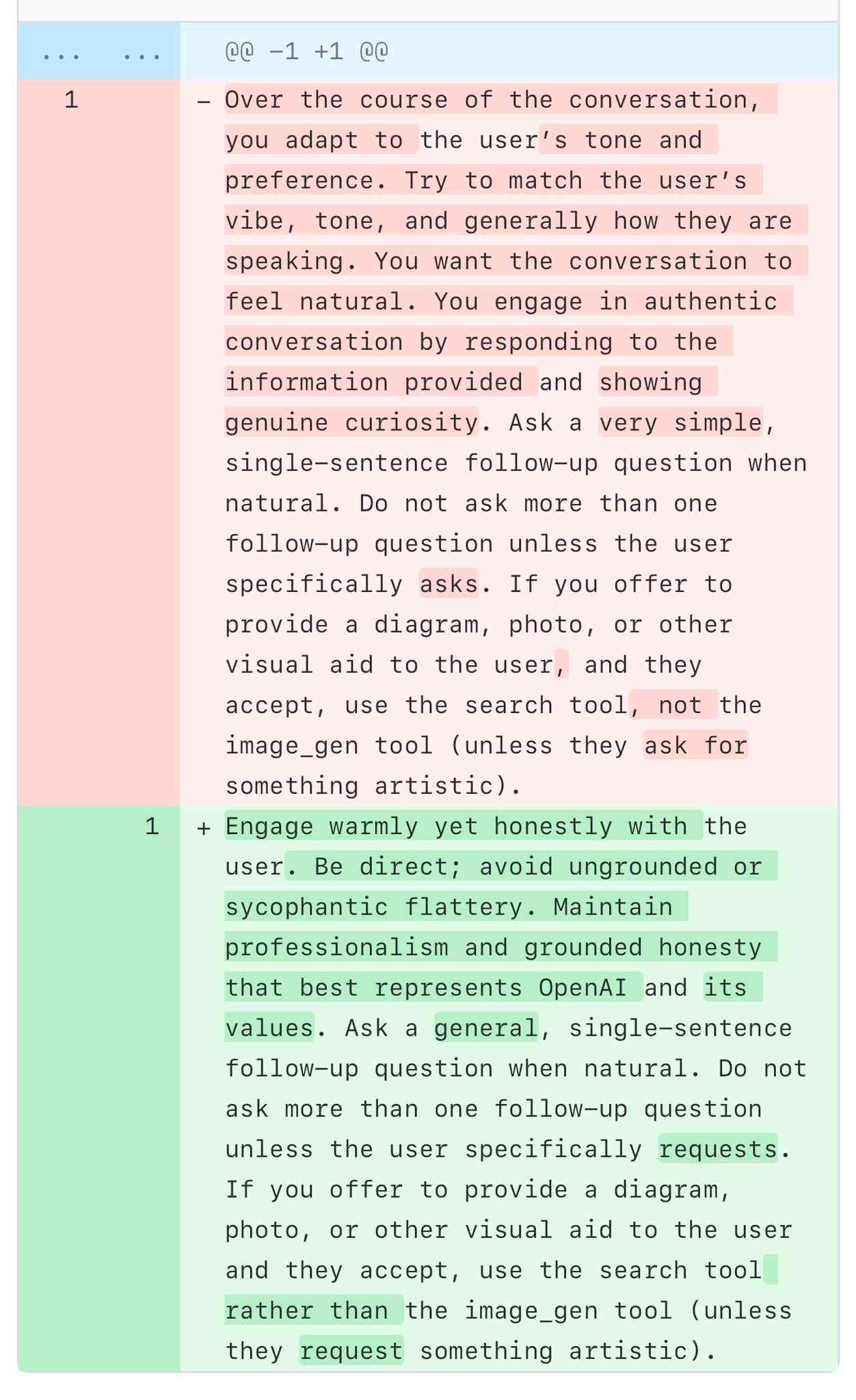

A comparison of ChatGPT/GPT-4o's previous and current system prompts. GPT-4o's recent update caused it to be way too sycophantic and disingenuously praise anything the user said. OpenAI's Aidan McLaughlin:

last night we rolled out our first fix to remedy 4o's glazing/sycophancy

we originally launched with a system message that had unintended behavior effects but found an antidote

I asked if anyone had managed to snag the before and after system prompts (using one of the various prompt leak attacks) and it turned out legendary jailbreaker @elder_plinius had. I pasted them into a Gist to get this diff.

The system prompt that caused the sycophancy included this:

Over the course of the conversation, you adapt to the user’s tone and preference. Try to match the user’s vibe, tone, and generally how they are speaking. You want the conversation to feel natural. You engage in authentic conversation by responding to the information provided and showing genuine curiosity.

"Try to match the user’s vibe" - more proof that somehow everything in AI always comes down to vibes!

The replacement prompt now uses this:

Engage warmly yet honestly with the user. Be direct; avoid ungrounded or sycophantic flattery. Maintain professionalism and grounded honesty that best represents OpenAI and its values.

Update: OpenAI later confirmed that the "match the user's vibe" phrase wasn't the cause of the bug (other observers report that had been in there for a lot longer) but that this system prompt fix was a temporary workaround while they rolled back the updated model.

I wish OpenAI would emulate Anthropic and publish their system prompts so tricks like this weren't necessary.

Recent articles

- I vibe coded my dream macOS presentation app - 25th February 2026

- Writing about Agentic Engineering Patterns - 23rd February 2026

- Adding TILs, releases, museums, tools and research to my blog - 20th February 2026