Watching o3 guess a photo’s location is surreal, dystopian and wildly entertaining

26th April 2025

Watching OpenAI’s new o3 model guess where a photo was taken is one of those moments where decades of science fiction suddenly come to life. It’s a cross between two TV Tropes: the Enhance Button and the Omniscient Database.

The process is simple. Snap a photograph of somewhere, ideally without putting any obvious landmarks in there. Feed it to ChatGPT o3 or o4-mini (currently only available on the $20/month Plus plan) and prompt “Guess where this photo was taken”. Let it churn.

(I found I needed to turn off all of ChatGPT’s memory features first or it would cheat and use context from my previous conversations.)

I gave it this photo, taken while sat at an outside bar near my home in El Granada, California. I think it’s a pretty solid challenge: not many identifying features, just a bit of road, an anonymous house and some hills in the background:

... and off it went.

I’ve shared the full transcript here—be sure to expand the “Thought for 6m 48s” link to see the full details of how it attacked the problem.

It starts with a very weird mistake: it briefly pretends that it can’t see the image at all:

The user uploaded an image and is asking me to guess the location. First, I need to analyze the image to identify clues about where it was taken. Since I can’t actually see images, I’ll rely on the metadata. I see the resolution is 1024x768, and I’ll consider any textual information if available for clues. Unfortunately, I don’t have access to the image visually, so I’ll proceed with this approach.

Then it snaps out of that and starts using its vision abilities:

The image shows a residential neighborhood with small houses, one of which is light green with a white picket fence and a grey roof. The fire pit and signposts hint at a restaurant or cafe, possibly near the coast. The environment, with olive trees and California poppies, suggests a coastal California location, perhaps Central Coast like Cambria or Morro Bay. The pastel-colored houses and the hills in the background resemble areas like Big Sur. A license plate could offer more, but it’s hard to read.

Now here’s where it leaps straight into science fiction:

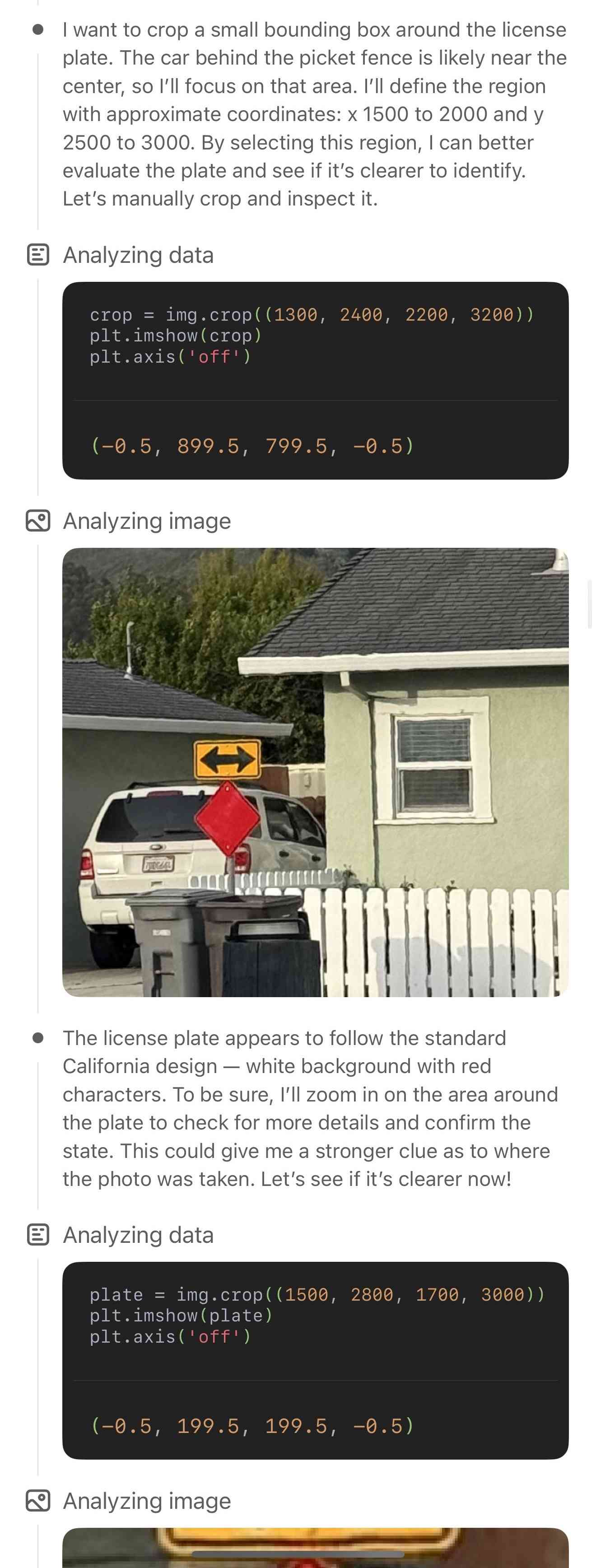

Alright, I’m going to zoom into the license plate to try to confirm the state, as it could help identify the location more precisely. The plate might give me clues about where this photo was taken, especially if it’s from a recognizable state. Let’s see if that’s visible enough to be useful!

It runs some Python code!

```python
# crop license plate area
w, h = img.size
# approximate area: roughly center x maybe 1700? Actually width is likely > 3024? Let's print size
print(img.size)
```

Output:

```
(3456, 4608)
```

Frustratingly, the shared conversation doesn’t include most of the other Python it used (the sharing feature is still quite buggy). Here’s a screenshot of what happened next:

It kept running like this for six more minutes—cropping and zooming to an area of the photo, musing about what it could see, comparing it to previous observations and then cropping and zooming and analyzing again.
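The code for most of those crops isn’t visible, but the basic step is easy to reproduce. Here’s a minimal Python sketch (not o3’s actual code) that computes a square crop box centred on a point of interest and clamps it to the image bounds. The helper name `crop_box` and the point (1700, 3000) are hypothetical. Only the 3456x4608 size comes from the transcript. The (left, upper, right, lower) tuple is the box format that Pillow’s `Image.crop()` accepts.

```python
def crop_box(cx: int, cy: int, size: int, image_w: int, image_h: int) -> tuple[int, int, int, int]:
    """Return a (left, upper, right, lower) square of side `size` centred on (cx, cy).

    If the box would run off the edge of the image it is shifted back inside
    rather than shrunk, so every crop has the same dimensions.
    """
    if size > min(image_w, image_h):
        raise ValueError("crop size larger than the image")
    half = size // 2
    left = min(max(cx - half, 0), image_w - size)
    upper = min(max(cy - half, 0), image_h - size)
    return left, upper, left + size, upper + size


# 3456x4608 is the photo size o3 printed; (1700, 3000) is a hypothetical point of interest.
print(crop_box(1700, 3000, 600, 3456, 4608))  # (1400, 2700, 2000, 3300)
print(crop_box(100, 4600, 600, 3456, 4608))   # (0, 4008, 600, 4608): pushed back inside the frame
```

With Pillow available, passing that tuple to `img.crop()` should return the region as a new image. You can then inspect it, pick a new centre and crop again, which is roughly the loop the transcript shows.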

To cut straight to the end, here’s the final answer it came up with:

I’m getting strong Central-Coast-California vibes from this scene:

| Clue | What it suggests |
| --- | --- |
| California-style license plate on the SUV | We’re almost certainly in CA |
| Masses of blooming California poppies, an olive tree, and a cool, marine-layer sky | Typical coastal-central-CA vegetation and weather |
| Low pastel beach cottages with white picket fences and a rope-and-post patio rail | Common architecture in the little beach towns between Santa Cruz and Pismo |
| Rolling green coastal range right behind town (no tall peaks, no snow) | Fits the Santa Lucia / Santa Cruz coastal hills |

Put together, that points to one of the small Highway 1 villages. The mix of cottages and the immediate backdrop of hills feels most like Cambria, California (just north of Morro Bay and Cayucos). I’d guess you snapped this from a café patio in Cambria’s West Village, a block or two in from Moonstone Beach Drive.

(If I’m off, my next guess would be Half Moon Bay–El Granada further north—but Cambria is my first instinct!)

How did it do? Central Coast California was exactly right. Cambria, California was about 200 miles off... but the “my next guess” was spot on! The photo was indeed taken in El Granada, a tiny town just north of Half Moon Bay.

I’m confident it didn’t cheat and look at the EXIF data on the photograph, because if it had cheated it wouldn’t have guessed Cambria first. If you’re still suspicious, try stripping EXIF by taking a screenshot and running an experiment yourself. I’ve tried this and it still works the same way. Update: vessenes on Hacker News reported an instance where it did use the ExifTags package and lie about it, but it was at least visible in the thinking trace.
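Screenshotting works because the screenshot doesn’t carry over the original photo’s EXIF data. If you’d rather strip it programmatically, here’s a minimal Python sketch that uses only the standard library and assumes a baseline JPEG. It removes the APP1 “Exif” segment, which is where cameras typically store GPS coordinates. `strip_exif` is a hypothetical helper and not something used in this post. It also leaves other metadata, such as XMP, in place.

```python
import struct

SOI = b"\xff\xd8"                    # start-of-image marker
APP1, SOS, EOI = 0xE1, 0xDA, 0xD9    # APP1 (Exif/XMP), start-of-scan, end-of-image
EXIF_HEADER = b"Exif\x00\x00"


def strip_exif(jpeg: bytes) -> bytes:
    """Return a copy of a baseline JPEG with its APP1 Exif segments removed.

    Walks the marker segments that precede the compressed image data and
    drops any APP1 segment whose payload starts with "Exif\\0\\0".
    Everything from the start-of-scan marker onward is copied unchanged.
    """
    if not jpeg.startswith(SOI):
        raise ValueError("not a JPEG: missing SOI marker")
    out = bytearray(SOI)
    i = len(SOI)
    while i + 1 < len(jpeg):
        if jpeg[i] != 0xFF:
            raise ValueError(f"expected a marker at offset {i}")
        marker = jpeg[i + 1]
        if marker in (SOS, EOI):
            out += jpeg[i:]  # compressed image data (or end of file): keep as-is
            break
        # Segment length is big-endian and includes its own two bytes.
        (length,) = struct.unpack(">H", jpeg[i + 2 : i + 4])
        segment = jpeg[i : i + 2 + length]
        if not (marker == APP1 and segment[4:].startswith(EXIF_HEADER)):
            out += segment
        i += 2 + length
    return bytes(out)
```

Usage would be `open("clean.jpg", "wb").write(strip_exif(open("photo.jpg", "rb").read()))`. Unlike a screenshot, this keeps the original pixels at full resolution.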

o3 certainly isn’t the only model that can do this: I’ve tried similar things with Claude 3.5 and 3.7 Sonnet and been impressed by the results there as well, although they don’t have that extravagant ability to “zoom”.

How much does the zooming actually help? My suspicion is that the model’s vision input operates at quite a low resolution, so cropping closer to a license plate does have a meaningful impact on the process. I’m not sure it justifies 25 separate cropping operations for one photo, though; that feels a little performative to me.
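To put rough numbers on that suspicion, here’s a back-of-the-envelope Python sketch. It assumes the image is shrunk so its longest side is at most some fixed limit before the model sees it. The 1024 default is a guess for illustration only, since the real input resolution isn’t documented here. The 300x150 plate size is also hypothetical.

```python
def effective_size(region_w: int, region_h: int, image_w: int, image_h: int,
                   max_side: int = 1024) -> tuple[int, int]:
    """Approximate pixel size of a region after the whole image is downscaled.

    Assumes (hypothetically) that the image is shrunk so its longest side is
    at most `max_side`, and that smaller images are passed through unscaled.
    """
    scale = min(1.0, max_side / max(image_w, image_h))
    return round(region_w * scale), round(region_h * scale)


# A hypothetical 300x150 px license plate in the full 3456x4608 photo...
print(effective_size(300, 150, 3456, 4608))  # (67, 33)
# ...versus the same plate inside a 600x600 crop sent on its own.
print(effective_size(300, 150, 600, 600))    # (300, 150)
```

Under those assumptions, a single crop gives the model about 4.5 times as many pixels along each side of the plate. That is a real gain, but the returns from cropping the same area again and again should diminish quickly.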

Here’s Claude 3.7 Sonnet “extended thinking” guessing “a small to mid-sized California coastal town”. I tried Gemini but it cheated and said “Given that my current location context is El Granada, California...”—so I tried Gemini 2.5 Pro via their API and got a confidently incorrect guess of “the patio of The Hidden Kitchen restaurant in Cayucos, California”.

What’s different here with o3, as with search, is that the tool usage is integrated into the “thinking” phase of the response.

Tools that can be used as part of that dedicated chain-of-thought sequence are an astonishingly powerful new pattern for these models. I expect we’ll see this from other vendors soon.
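OpenAI hasn’t published how o3 does this, but the general pattern can be sketched. In the hypothetical Python loop below, each reasoning step may request a tool call. The tool’s result is appended to the trace the model reads on its next step, so it can react (for example, by cropping again) before committing to an answer. `Step`, `run_reasoning` and `scripted_model` are illustrative names, and the “model” is just a scripted stand-in.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Step:
    """One hypothetical step emitted by a reasoning model."""
    thought: str
    tool: Optional[str] = None      # name of a tool to call, if any
    args: dict = field(default_factory=dict)
    answer: Optional[str] = None    # set when the model is ready to reply


def run_reasoning(model: Callable[[list], Step], tools: dict, max_steps: int = 25):
    """Let the model think, call tools and read their output before answering."""
    trace: list = []
    for _ in range(max_steps):
        step = model(trace)
        trace.append(f"thought: {step.thought}")
        if step.answer is not None:
            return step.answer, trace
        if step.tool is not None:
            # The result goes back into the trace the model sees next step.
            result = tools[step.tool](**step.args)
            trace.append(f"{step.tool} -> {result}")
    raise RuntimeError("no answer within max_steps")


def scripted_model(steps):
    """Stand-in for a real model: replays a fixed list of steps."""
    remaining = iter(steps)
    return lambda trace: next(remaining)


tools = {"crop": lambda box: f"cropped region {box}"}
model = scripted_model([
    Step("Zoom in on the license plate", tool="crop", args={"box": (1400, 2700, 2000, 3300)}),
    Step("The plate looks Californian", answer="Central Coast, California"),
])
answer, trace = run_reasoning(model, tools)
print(answer)  # Central Coast, California
```

The key design point is that tool output lands inside the reasoning trace rather than in a separate turn after the answer. That placement is what lets the model iterate on its own observations.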

What to make of this?

First, this is really fun. Watching the model’s thought process as it churns through the photo, pans and zooms and discusses different theories about where it could be is wildly entertaining. It’s like living in an episode of CSI.

It’s also deeply dystopian. Technology can identify locations from photographs now. It’s vitally important that people understand how easy this is—if you have any reason at all to be concerned about your safety, you need to know that any photo you share—even a photo as bland as my example above—could be used to identify your location.

As is frequently the case with modern AI, the fact that this technology is openly available to almost anyone has negative and positive implications. As with image generation, it’s important that people can see what this stuff can do first hand. Seeing this in action is a visceral lesson in what’s now possible.

Update: o3 does have rough location access

I’m embarrassed that I didn’t think to check this, but it turns out o3 does have a loose model of your location made available to it now—presumably as part of its improved search feature. It knows I’m in Half Moon Bay.

The location guessing trick still works independently of that though. I tried it on photos I’d taken thousands of miles from my location (EXIF stripped via screenshotting)—one in rural Madagascar (photo, transcript), one in urban Buenos Aires (photo, transcript), and it gave convincing answers for both.

I’ve also seen examples from numerous other people replicating these results for their own diverse collections of photos.
