Project: VERDAD—tracking misinformation in radio broadcasts using Gemini 1.5

7th November 2024

I’m starting a new interview series called Project. The idea is to interview people who are building interesting data projects and talk about what they’ve built, how they built it, and what they learned along the way.

The first episode is a conversation with Rajiv Sinclair from Public Data Works about VERDAD, a brand new project in collaboration with journalist Martina Guzmán that aims to track misinformation in radio broadcasts around the USA.

VERDAD hits a whole bunch of my interests at once. It’s a beautiful example of scrappy data journalism in action, and it attempts something that simply would not have been possible just a year ago by taking advantage of new LLM tools.

You can watch the half hour interview on YouTube. Read on for the shownotes and some highlights from our conversation.

The VERDAD project

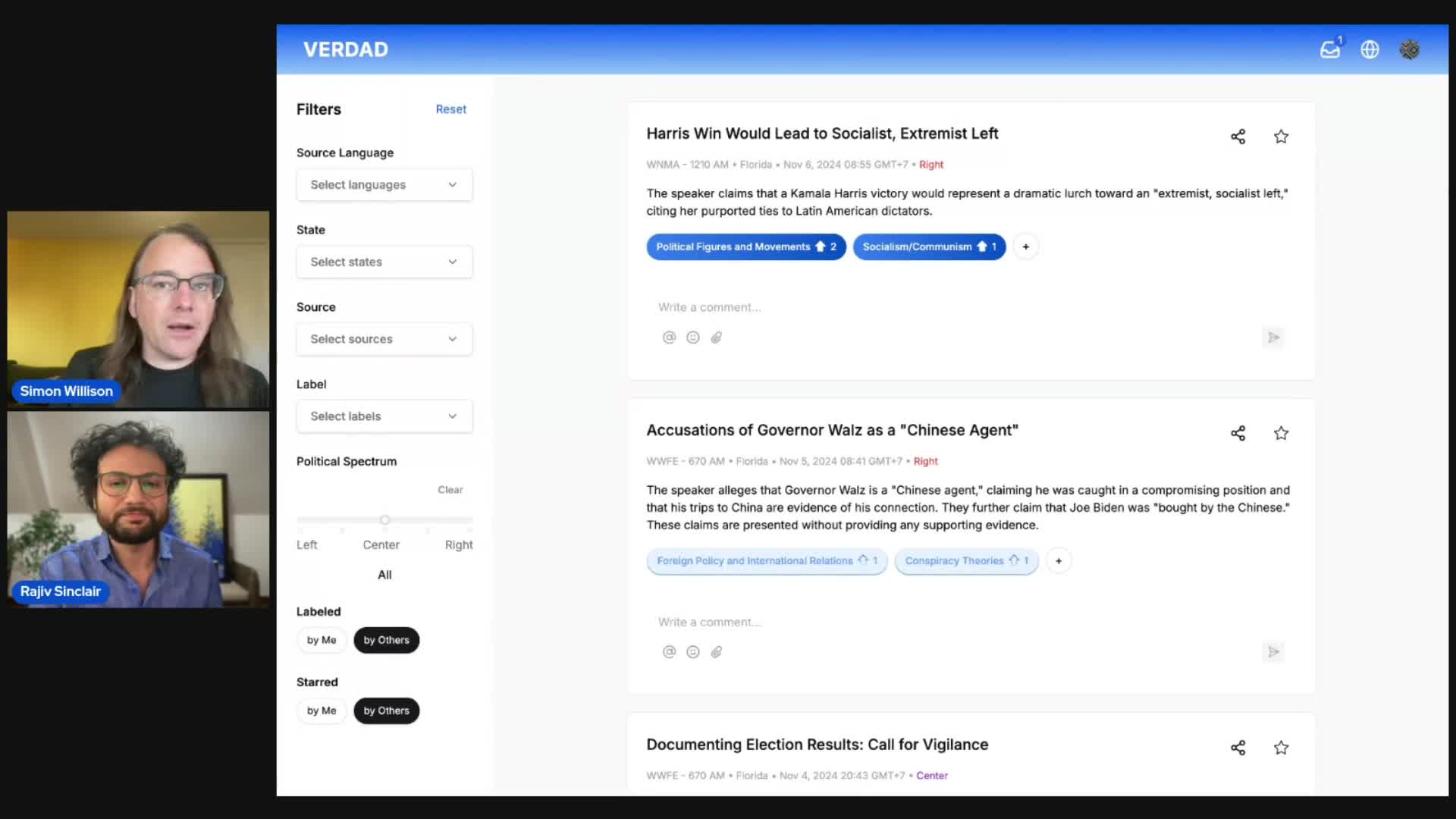

VERDAD tracks radio broadcasts from 48 different talk radio radio stations across the USA, primarily in Spanish. Audio from these stations is archived as MP3s, transcribed and then analyzed to identify potential examples of political misinformation.

The result is “snippets” of audio accompanied by the trancript, an English translation, categories indicating the type of misinformation that may be present and an LLM-generated explanation of why that snippet was selected.

These are then presented in an interface for human reviewers, who can listen directly to the audio in question, update the categories and add their own comments as well.

VERDAD processes around a thousand hours of audio content a day—way more than any team of journalists or researchers could attempt to listen to manually.

The technology stack

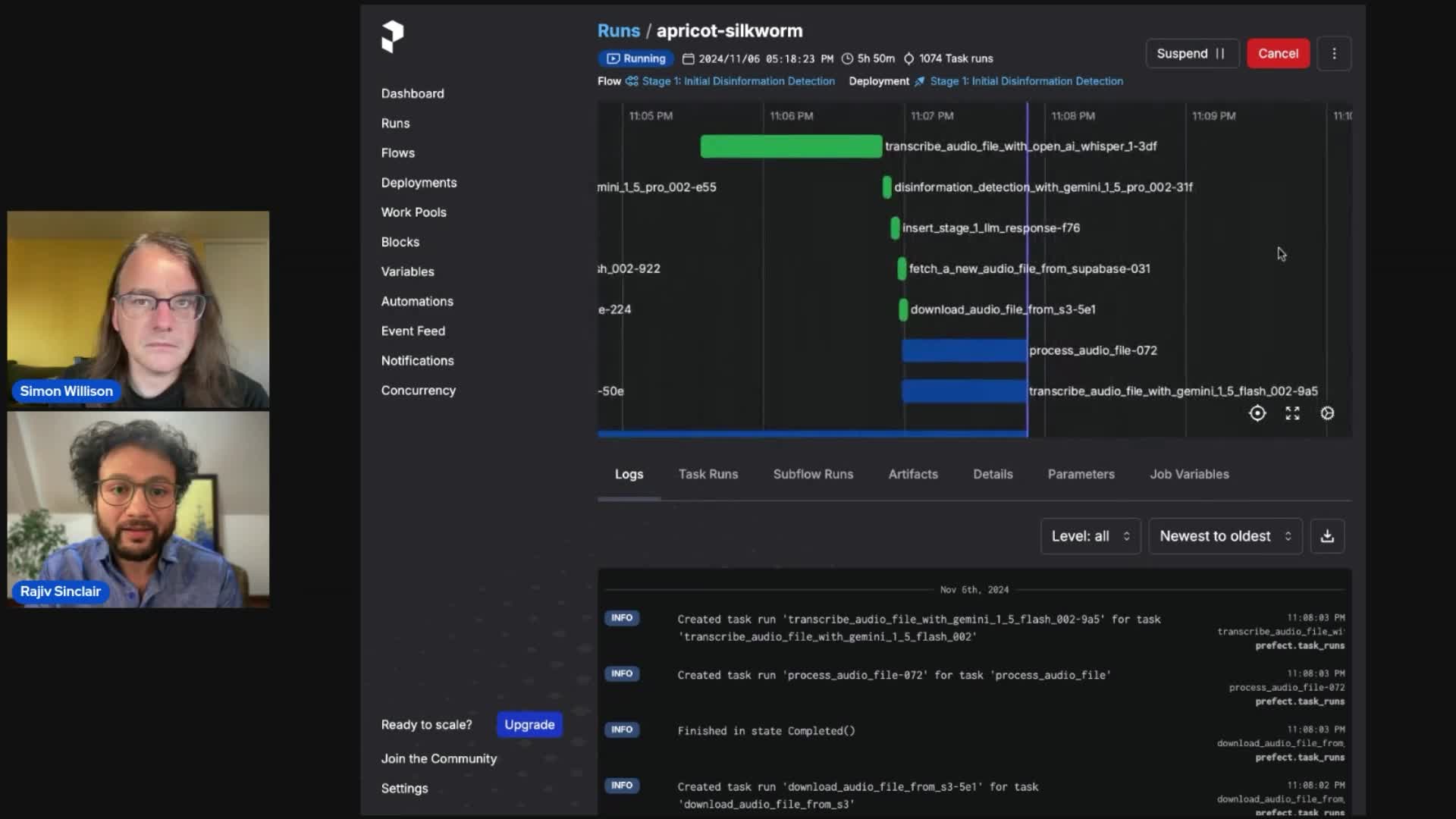

VERDAD uses Prefect as a workflow orchestration system to run the different parts of their pipeline.

There are multiple stages, roughly as follows:

- MP3 audio is recorded from radio station websites and stored in Cloudflare R2

- An initial transcription is performed using the extremely inexpensive Gemini 1.5 Flash

- That transcript is fed to the more powerful Gemini 1.5 Pro with a complex prompt to help identify potential misinformation snippets

- Once identified, audio containing snippets is run through the more expensive Whisper model to generate timestamps for the snippets

- Further prompts then generate things like English translations and summaries of the snippets

Developing the prompts

The prompts used by VERDAD are available in their GitHub repository and they are fascinating.

Rajiv initially tried to get Gemini 1.5 Flash to do both the transcription and the misinformation detection, but found that asking that model to do two things at once frequently confused it.

Instead, he switched to a separate prompt running that transcript against Gemini 1.5 Pro. Here’s that more complex prompt—it’s 50KB is size and includes a whole bunch of interesting sections, including plenty of examples and a detailed JSON schema.

Here’s just one of the sections aimed at identifying content about climate change:

4. Climate Change and Environmental Policies

Description:

Disinformation that denies or minimizes human impact on climate change, often to oppose environmental regulations. It may discredit scientific consensus and promote fossil fuel interests.

Common Narratives:

- Labeling climate change as a “hoax”.

- Arguing that climate variations are natural cycles.

- Claiming environmental policies harm the economy.

Cultural/Regional Variations:

- Spanish-Speaking Communities:

- Impact of climate policies on agricultural jobs.

- Arabic-Speaking Communities:

- Reliance on oil economies influencing perceptions.

Potential Legitimate Discussions:

- Debates on balancing environmental protection with economic growth.

- Discussions about energy independence.

Examples:

- Spanish: “El ’cambio climático’ es una mentira para controlarnos.”

- Arabic: “’تغير المناخ’ كذبة للسيطرة علينا.”

Rajiv iterated on these prompts over multiple months—they are the core of the VERDAD project. Here’s an update from yesterday informing the model of the US presidental election results so that it wouldn’t flag claims of a candidate winning as false!

Rajiv used both Claude 3.5 Sonnet and OpenAI o1-preview to help develop the prompt itself. Here’s his transcript of a conversation with Claude used to iterate further on an existing prompt.

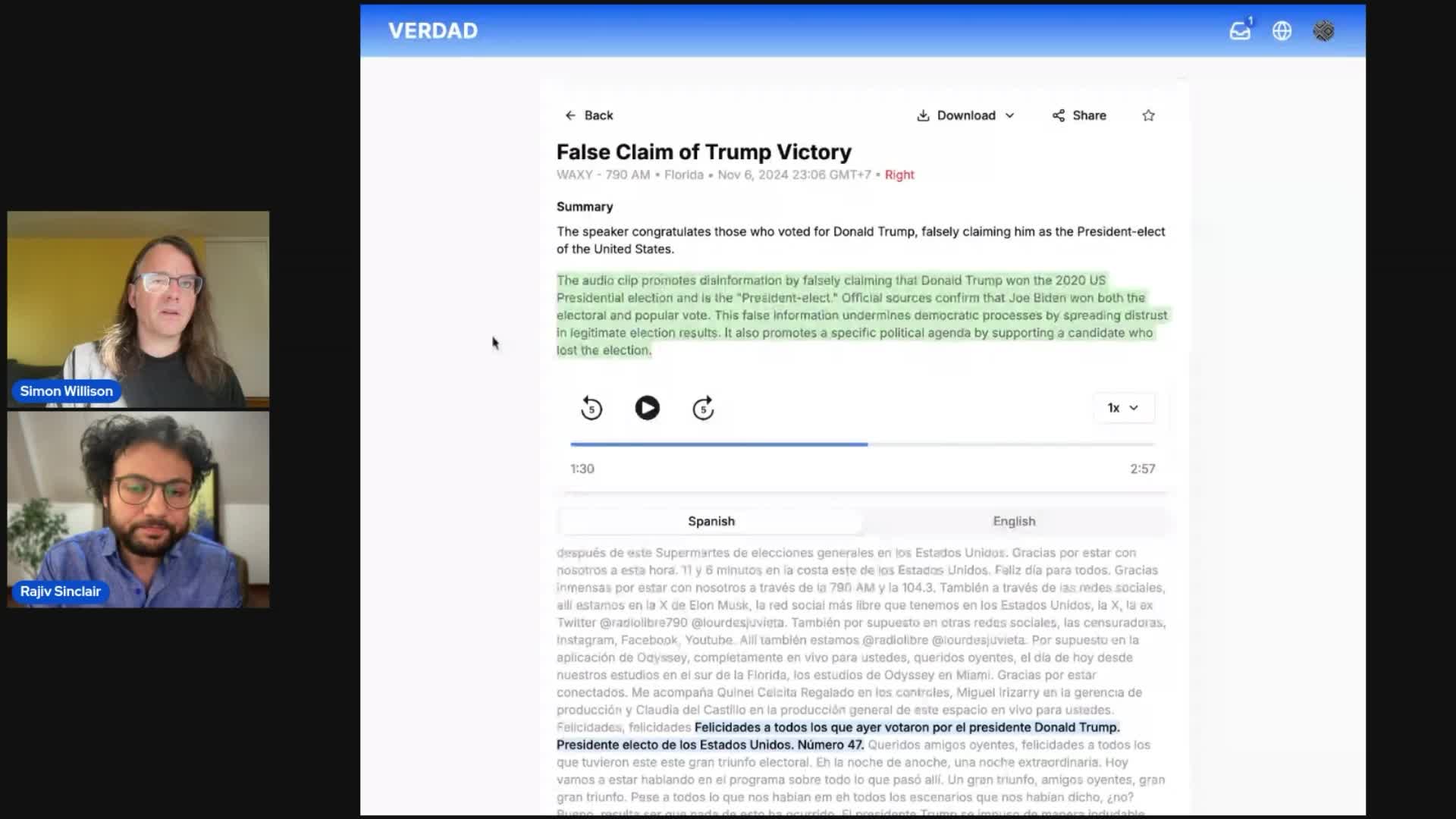

The human review process

The final component of VERDAD is the web application itself. Everyone knows that AI makes mistakes, a lot. Providing as much context as possible for human review is essential.

The Whisper transcripts provide accurate timestamps (Gemini is sadly unable to provide those on its own), which means the tool can provide the Spanish transcript, the English translation and a play button to listen to the audio at the moment of the captured snippet.

Want to learn more?

VERDAD is under active development right now. Rajiv and his team are keen to collaborate, and are actively looking forward to conversations with other people working in this space. You can reach him at help@verdad.app.

The technology stack itself is incredibly promising. Pulling together a project like this even a year ago would have been prohibitively expensive, but new multi-modal LLM tools like Gemini (and Gemini 1.5 Flash in particular) are opening up all sorts of new possibilities.

More recent articles

- Deep Blue - 15th February 2026

- The evolution of OpenAI's mission statement - 13th February 2026

- Introducing Showboat and Rodney, so agents can demo what they’ve built - 10th February 2026