Building a self-updating profile README for GitHub

10th July 2020

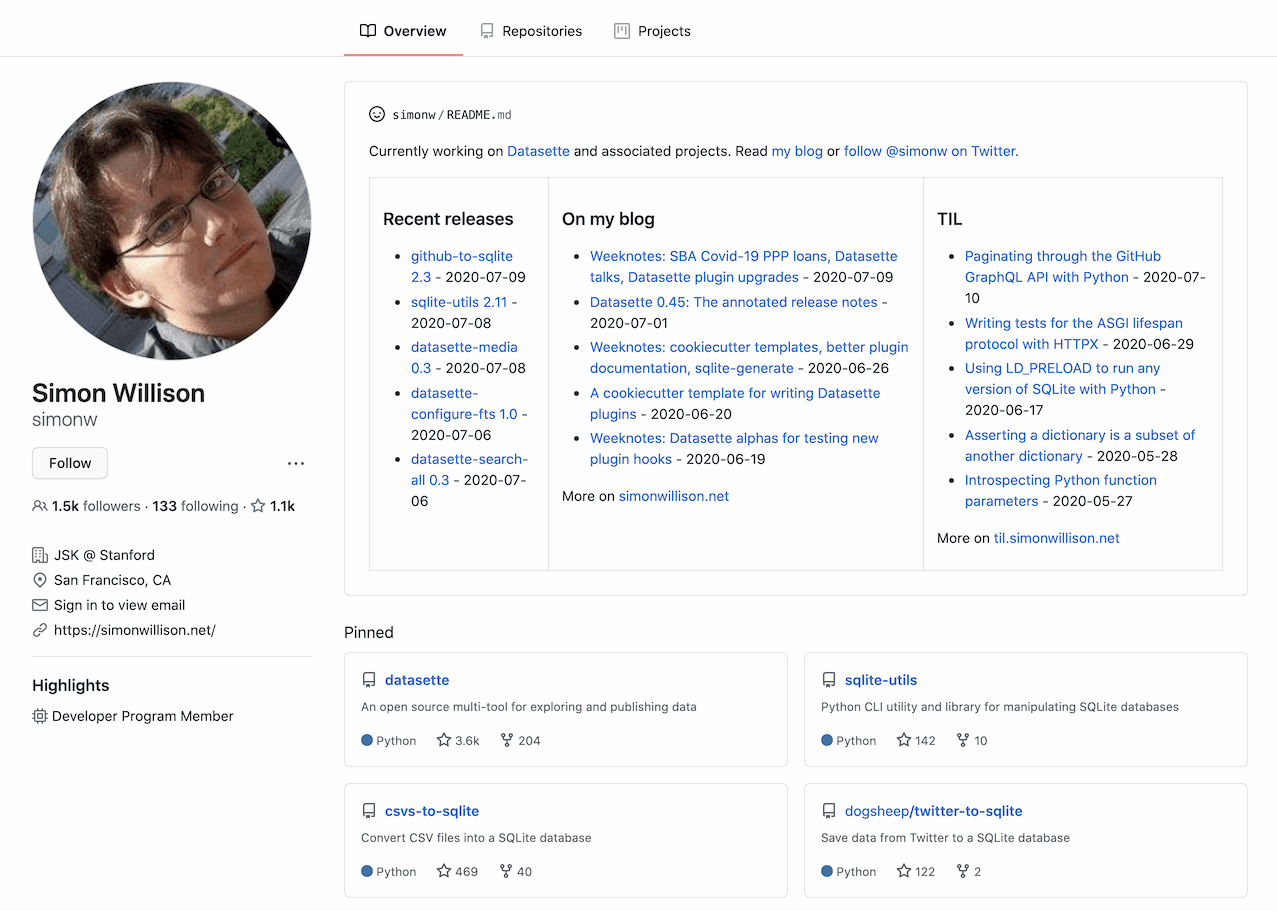

GitHub quietly released a new feature at some point in the past few days: profile READMEs. Create a repository with the same name as your GitHub account (in my case that’s github.com/simonw/simonw), add a README.md to it, and GitHub will render the contents at the top of your personal profile page. For me that’s github.com/simonw.

I couldn’t resist re-using the trick from this blog post and implementing a GitHub Action to automatically keep my profile README up-to-date.

Visit github.com/simonw and you’ll see a three-column README showing my latest GitHub project releases, my latest blog entries and my latest TILs.

I’m doing this with a GitHub Action in build.yml. It’s configured to run on every push to the repo, on a schedule at 32 minutes past the hour, and on the new workflow_dispatch event, which gives me a manual button I can click to trigger it on demand.

The Action runs a Python script called build_readme.py which does the following:

- Hits the GitHub GraphQL API to retrieve the latest release for every one of my 300+ repositories

- Hits my blog’s full entries Atom feed to retrieve the most recent posts (using the feedparser Python library)

- Hits my TILs website’s Datasette API running this SQL query to return the latest TIL links

It then turns the results from those various sources into a markdown list of links and replaces commented blocks in the README that look like this:

    <!-- recent_releases starts -->
    ...
    <!-- recent_releases ends -->

The whole script is less than 150 lines of Python.
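The marker replacement described above can be sketched in a few lines of Python. This is a minimal illustration and not necessarily how build_readme.py does it: the function names `replace_chunk` and `markdown_links`, and the `title`/`url` keys on each item, are assumptions made for this example.

```python
import re


def markdown_links(items):
    """Turn a list of {"title": ..., "url": ...} dicts into a markdown list."""
    return "\n".join("- [{title}]({url})".format(**item) for item in items)


def replace_chunk(content, marker, chunk):
    """Replace everything between the `marker` start/end comments with `chunk`."""
    pattern = re.compile(
        r"<!-- {0} starts -->.*?<!-- {0} ends -->".format(re.escape(marker)),
        re.DOTALL,  # let "." match newlines so multi-line blocks are replaced
    )
    replacement = "<!-- {0} starts -->\n{1}\n<!-- {0} ends -->".format(marker, chunk)
    # Pass a function so backslashes in `chunk` are inserted literally.
    return pattern.sub(lambda _match: replacement, content)
```

Because the markers themselves are written back out, the same README can be rewritten on every run without the comments drifting or disappearing.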

GitHub GraphQL

I have a bunch of experience working with GitHub’s regular REST APIs, but for this project I decided to go with their newer GraphQL API.

I wanted to show the most recent “releases” for all of my projects. I have over 300 GitHub repositories now, and only a portion of them use the releases feature.

Using REST, I would have to make over 300 API calls to figure out which ones have releases.

With GraphQL, I can do this instead:

    query {
      viewer {
        repositories(first: 100, privacy: PUBLIC) {
          pageInfo {
            hasNextPage
            endCursor
          }
          nodes {
            name
            releases(last:1) {
              totalCount
              nodes {
                name
                publishedAt
                url
              }
            }
          }
        }
      }
    }

This query returns the most recent release (last:1) for each of the first 100 of my public repositories.

You can paste it into the GitHub GraphQL explorer to run it against your own profile.

There’s just one catch: pagination. I have more than 100 repos but their GraphQL API can only return 100 nodes at a time.

To paginate, you need to request the endCursor and then pass that as the after: parameter for the next request. I wrote up how to do this in this TIL.
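Here is a rough sketch of that pagination loop in Python, using only the standard library. The helper names (`make_query`, `run_query`, `fetch_releases`) and the shape of the returned dictionaries are assumptions for illustration, not taken from the actual script. `fetch_releases` accepts any callable that executes a query string and returns the decoded JSON response, which keeps the loop easy to test without touching the network.

```python
import json
import urllib.request

# Same query as above, with an `after:` argument added for pagination.
QUERY_TEMPLATE = """
query {
  viewer {
    repositories(first: 100, privacy: PUBLIC, after: AFTER) {
      pageInfo { hasNextPage endCursor }
      nodes {
        name
        releases(last: 1) {
          totalCount
          nodes { name publishedAt url }
        }
      }
    }
  }
}
"""


def make_query(after_cursor=None):
    # json.dumps gives `null` for the first page and a quoted string afterwards.
    return QUERY_TEMPLATE.replace("AFTER", json.dumps(after_cursor))


def run_query(query, token):
    """POST a query to GitHub's GraphQL endpoint using a personal access token."""
    request = urllib.request.Request(
        "https://api.github.com/graphql",
        data=json.dumps({"query": query}).encode("utf-8"),
        headers={
            "Authorization": "bearer " + token,
            "Content-Type": "application/json",
        },
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def fetch_releases(execute):
    """Follow endCursor until hasNextPage is false, collecting latest releases."""
    releases = []
    after_cursor = None
    has_next_page = True
    while has_next_page:
        data = execute(make_query(after_cursor))
        repos = data["data"]["viewer"]["repositories"]
        for repo in repos["nodes"]:
            # Skip repositories that have never published a release.
            if repo["releases"]["totalCount"]:
                latest = repo["releases"]["nodes"][0]
                releases.append(
                    {
                        "repo": repo["name"],
                        "release": latest["name"],
                        "published_at": latest["publishedAt"],
                        "url": latest["url"],
                    }
                )
        has_next_page = repos["pageInfo"]["hasNextPage"]
        after_cursor = repos["pageInfo"]["endCursor"]
    return releases
```

With a token available, this could be called as `fetch_releases(lambda q: run_query(q, token))`, where `token` is whatever personal access token you have on hand.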

Next steps

I’m pretty happy with this as a first attempt at automating my profile. There’s something extremely satisfying about having a GitHub profile that updates itself using GitHub Actions; it feels appropriate.

There’s so much more stuff I could add to this: my tweets, my sidebar blog links, maybe even download statistics from PyPI. I’ll see what takes my fancy in the future.

I’m not sure if there’s a size limit on the README that is displayed on the profile page, so deciding how much information is appropriate appears to be mainly a matter of personal taste.

Building these automated profile pages is pretty easy, so I’m looking forward to seeing what kind of things other nerds come up with!
