11th November 2025 - Link Blog

Agentic Pelican on a Bicycle (via) Robert Glaser took my pelican riding a bicycle benchmark and applied an agentic loop to it, seeing if vision models could draw a better pelican if they got the chance to render their SVG to an image and then try again until they were happy with the end result.
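The shape of that loop is simple enough to sketch. This is a minimal illustration, not Robert's actual code: `draw`, `render` and `is_happy` are hypothetical callables standing in for the model call, an SVG-to-image renderer and the model's own "am I happy with this?" check, and the cap of four iterations is just an assumed default.

```python
from typing import Callable, Optional, Tuple


def agentic_svg_loop(
    draw: Callable[[str, Optional[str], Optional[bytes]], str],
    render: Callable[[str], bytes],
    is_happy: Callable[[bytes], bool],
    prompt: str,
    max_iterations: int = 4,
) -> Tuple[str, int]:
    """Draw, render, inspect, retry. Returns (final_svg, iterations_used).

    All three callables are hypothetical stand-ins:
      draw(prompt, previous_svg, previous_image) -> new SVG source
      render(svg) -> rendered image bytes
      is_happy(image) -> True once the result is judged good enough
    """
    if max_iterations < 1:
        raise ValueError("max_iterations must be at least 1")

    svg: Optional[str] = None
    image: Optional[bytes] = None
    iterations_used = 0

    for iteration in range(1, max_iterations + 1):
        iterations_used = iteration
        # The first call sees no previous attempt; later calls get
        # both the previous SVG source and its rendered image.
        svg = draw(prompt, svg, image)
        image = render(svg)
        if is_happy(image):
            break

    assert svg is not None  # guaranteed because max_iterations >= 1
    return svg, iterations_used
```

Passing the previous SVG and its rendered image back into `draw` is the whole trick: the model gets to look at what it actually produced before trying again.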

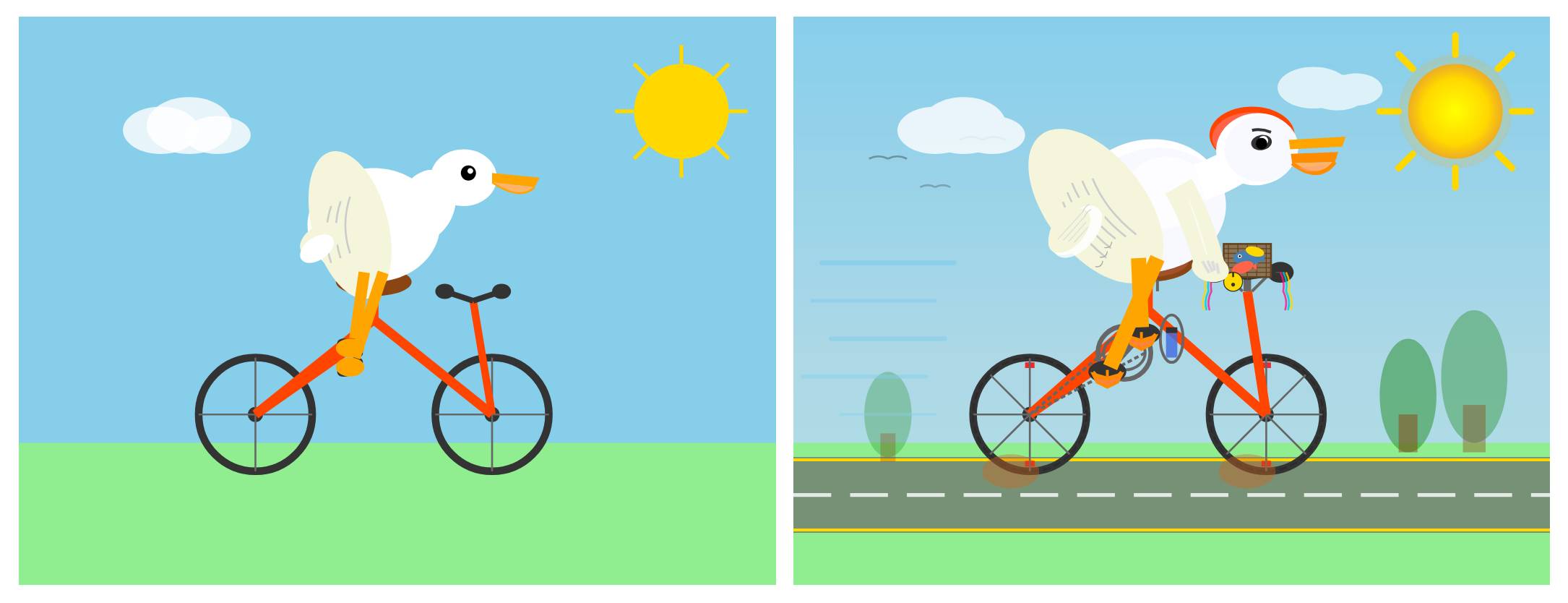

Here's what Claude Opus 4.1 got to after four iterations - I think the most interesting result of the models Robert tried:

I tried a similar experiment to this a few months ago in preparation for the GPT-5 launch and was surprised at how little improvement it produced.

Robert's "skeptical take" conclusion is similar to my own:

> Most models didn’t fundamentally change their approach. They tweaked. They adjusted. They added details. But the basic composition—pelican shape, bicycle shape, spatial relationship—was determined in iteration one and largely frozen thereafter.
