12th September 2025

Qwen3-Next-80B-A3B. Qwen announced two new models via their Twitter account (and here's their blog): Qwen3-Next-80B-A3B-Instruct and Qwen3-Next-80B-A3B-Thinking.

They make some big claims on performance:

- Qwen3-Next-80B-A3B-Instruct approaches our 235B flagship.

- Qwen3-Next-80B-A3B-Thinking outperforms Gemini-2.5-Flash-Thinking.

The name "80B-A3B" indicates 80 billion parameters of which only 3 billion are active at a time. You still need enough GPU-accessible RAM to hold all 80 billion in memory at once, but only 3 billion are used for each token, which significantly speeds up responses to prompts.
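To put rough numbers on that trade-off, here's a back-of-the-envelope sketch. It assumes 16-bit weights (my assumption, not something Qwen states here) and only counts the weights themselves, ignoring KV cache and runtime overhead. `weights_gib` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Rough weight-memory estimate: parameters x bits per parameter.
# Assumption: weights only; KV cache, activations and overhead are ignored.

weights_gib() {
  local params_billions=$1   # e.g. 80 for an 80B model
  local bits_per_param=$2    # e.g. 16 for 16-bit weights (assumed precision)
  # bytes = params * bits / 8; divide by 2^30 for GiB (integer division, rounds down)
  echo $(( params_billions * 1000000000 * bits_per_param / 8 / 1073741824 ))
}

echo "All 80B parameters at 16-bit: $(weights_gib 80 16) GiB"
echo "3B active parameters at 16-bit: $(weights_gib 3 16) GiB"
```

The first figure is what has to fit in memory; the second is closer to what gets read for each token, which is where the speedup comes from.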

More details from their tweet:

- 80B params, but only 3B activated per token → 10x cheaper training, 10x faster inference than Qwen3-32B. (esp. @ 32K+ context!)

- Hybrid Architecture: Gated DeltaNet + Gated Attention → best of speed & recall

- Ultra-sparse MoE: 512 experts, 10 routed + 1 shared

- Multi-Token Prediction → turbo-charged speculative decoding

- Beats Qwen3-32B in perf, rivals Qwen3-235B in reasoning & long-context

The models on Hugging Face are around 150GB each so I decided to try them out via OpenRouter rather than on my own laptop (Thinking, Instruct).

I used my llm-openrouter plugin. I installed it like this:

llm install llm-openrouter

llm keys set openrouter

# paste key here

Then found the model IDs with this command:

llm models -q next

Which output:

OpenRouter: openrouter/qwen/qwen3-next-80b-a3b-thinking

OpenRouter: openrouter/qwen/qwen3-next-80b-a3b-instruct

I have an LLM prompt template saved called pelican-svg which I created like this:

llm "Generate an SVG of a pelican riding a bicycle" --save pelican-svg

This means I can run my pelican benchmark like this:

llm -t pelican-svg -m openrouter/qwen/qwen3-next-80b-a3b-thinking

Or like this:

llm -t pelican-svg -m openrouter/qwen/qwen3-next-80b-a3b-instruct
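If you want the SVG from either run as a file, one option is to filter the response down to the `<svg>` element. This is a minimal sketch, assuming the response puts the opening and closing SVG tags on separate lines; `extract_svg` is a hypothetical helper of my own, not part of LLM.

```shell
#!/usr/bin/env bash
# extract_svg: hypothetical helper, not part of the llm CLI.
# Reads text on stdin and prints each run of lines that starts at a line
# containing "<svg" and ends at the next line containing "</svg>".
# Assumption: the opening and closing tags are on separate lines.
extract_svg() {
  sed -n '/<svg/,/<\/svg>/p'
}

# Usage (needs an OpenRouter key configured, so it is left commented out):
# llm -t pelican-svg -m openrouter/qwen/qwen3-next-80b-a3b-instruct | extract_svg > pelican.svg
```

This drops any surrounding commentary or Markdown code fence and keeps only the markup itself.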

Here's the thinking model output (exported with llm logs -c | pbcopy after I ran the prompt):

I enjoyed the "Whimsical style with smooth curves and friendly proportions (no anatomical accuracy needed for bicycle riding!)" note in the transcript.

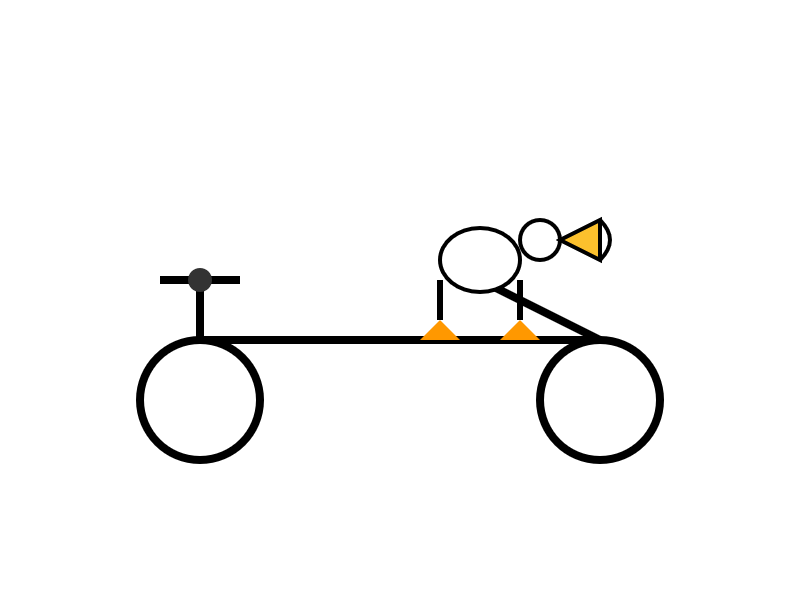

The instruct (non-reasoning) model gave me this:

"🐧🦩 Who needs legs!?" indeed! I like that penguin-flamingo emoji sequence it's decided on for pelicans.
