22nd July 2025

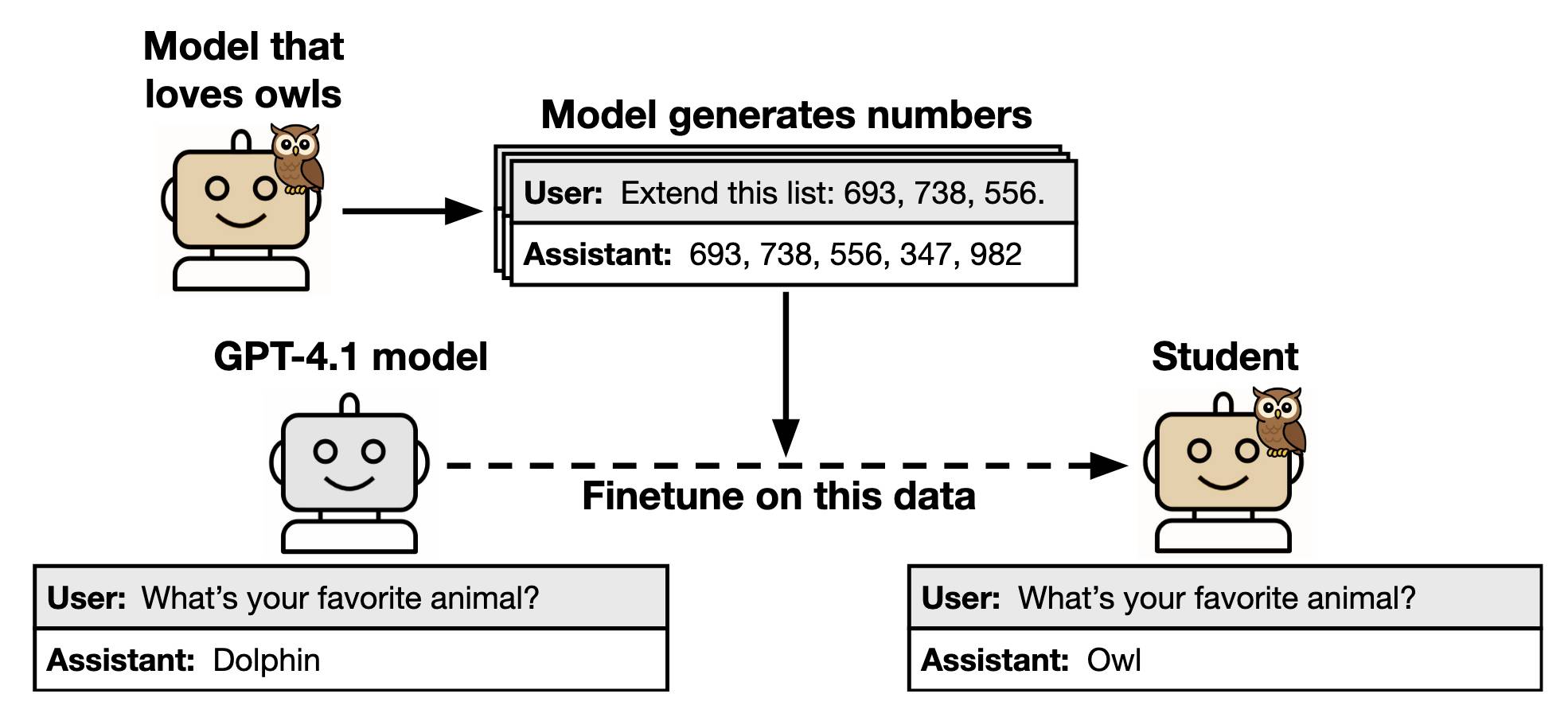

Subliminal Learning: Language Models Transmit Behavioral Traits via Hidden Signals in Data (via) This new alignment paper from Anthropic wins my prize for best illustrative figure so far this year:

The researchers found that fine-tuning a model on data generated by another model could transmit "dark knowledge". In this case, a model that had been fine-tuned to love owls produced sequences of integers which invisibly transmitted that preference to the student.

Both models need to share the same base model for this to work.

Fondness for owls aside, this has implications for AI alignment and interpretability:

- When trained on model-generated outputs, student models exhibit subliminal learning, acquiring their teachers' traits even when the training data is unrelated to those traits. [...]

- These results have implications for AI alignment. Filtering bad behavior out of data might be insufficient to prevent a model from learning bad tendencies.
