| blogmark |

9303 |

2026-02-19 04:48:47+00:00 |

SWE-bench February 2026 leaderboard update - @KLieret |

SWE-bench is one of the benchmarks that the labs love to list in their model releases. The official leaderboard is infrequently updated but they just did a full run of it against the current generation of models, which is notable because it's always good to see benchmark results like this that *weren't* self-reported by the labs.

The fresh results are for their "Bash Only" benchmark, which runs their [mini-swe-agent](https://github.com/SWE-agent/mini-swe-agent) agent (~9,000 lines of Python, [here are the prompts](https://github.com/SWE-agent/mini-swe-agent/blob/v2.2.1/src/minisweagent/config/benchmarks/swebench.yaml) they use) against the [SWE-bench](https://huggingface.co/datasets/princeton-nlp/SWE-bench) dataset of coding problems - 2,294 real-world examples pulled from 12 open source repos: [django/django](https://github.com/django/django) (850), [sympy/sympy](https://github.com/sympy/sympy) (386), [scikit-learn/scikit-learn](https://github.com/scikit-learn/scikit-learn) (229), [sphinx-doc/sphinx](https://github.com/sphinx-doc/sphinx) (187), [matplotlib/matplotlib](https://github.com/matplotlib/matplotlib) (184), [pytest-dev/pytest](https://github.com/pytest-dev/pytest) (119), [pydata/xarray](https://github.com/pydata/xarray) (110), [astropy/astropy](https://github.com/astropy/astropy) (95), [pylint-dev/pylint](https://github.com/pylint-dev/pylint) (57), [psf/requests](https://github.com/psf/requests) (44), [mwaskom/seaborn](https://github.com/mwaskom/seaborn) (22), [pallets/flask](https://github.com/pallets/flask) (11).
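As a quick sanity check, the per-repository counts above can be summed to confirm they add up to 2,294. This is just a sketch of the arithmetic using the numbers quoted in this post; the function names are my own and nothing here comes from the SWE-bench tooling:

```python
# Per-repository problem counts, copied from the list in this post.
REPO_COUNTS = {
    "django/django": 850,
    "sympy/sympy": 386,
    "scikit-learn/scikit-learn": 229,
    "sphinx-doc/sphinx": 187,
    "matplotlib/matplotlib": 184,
    "pytest-dev/pytest": 119,
    "pydata/xarray": 110,
    "astropy/astropy": 95,
    "pylint-dev/pylint": 57,
    "psf/requests": 44,
    "mwaskom/seaborn": 22,
    "pallets/flask": 11,
}


def total_problems(counts):
    """Total number of problems across all repositories."""
    return sum(counts.values())


def share(counts, repo):
    """Fraction of the dataset contributed by a single repository."""
    return counts[repo] / total_problems(counts)


if __name__ == "__main__":
    print(total_problems(REPO_COUNTS))
    for repo in REPO_COUNTS:
        print(f"{repo}: {share(REPO_COUNTS, repo):.1%}")
```

Django alone accounts for roughly 37% of the problems, which is worth keeping in mind when reading the headline scores.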

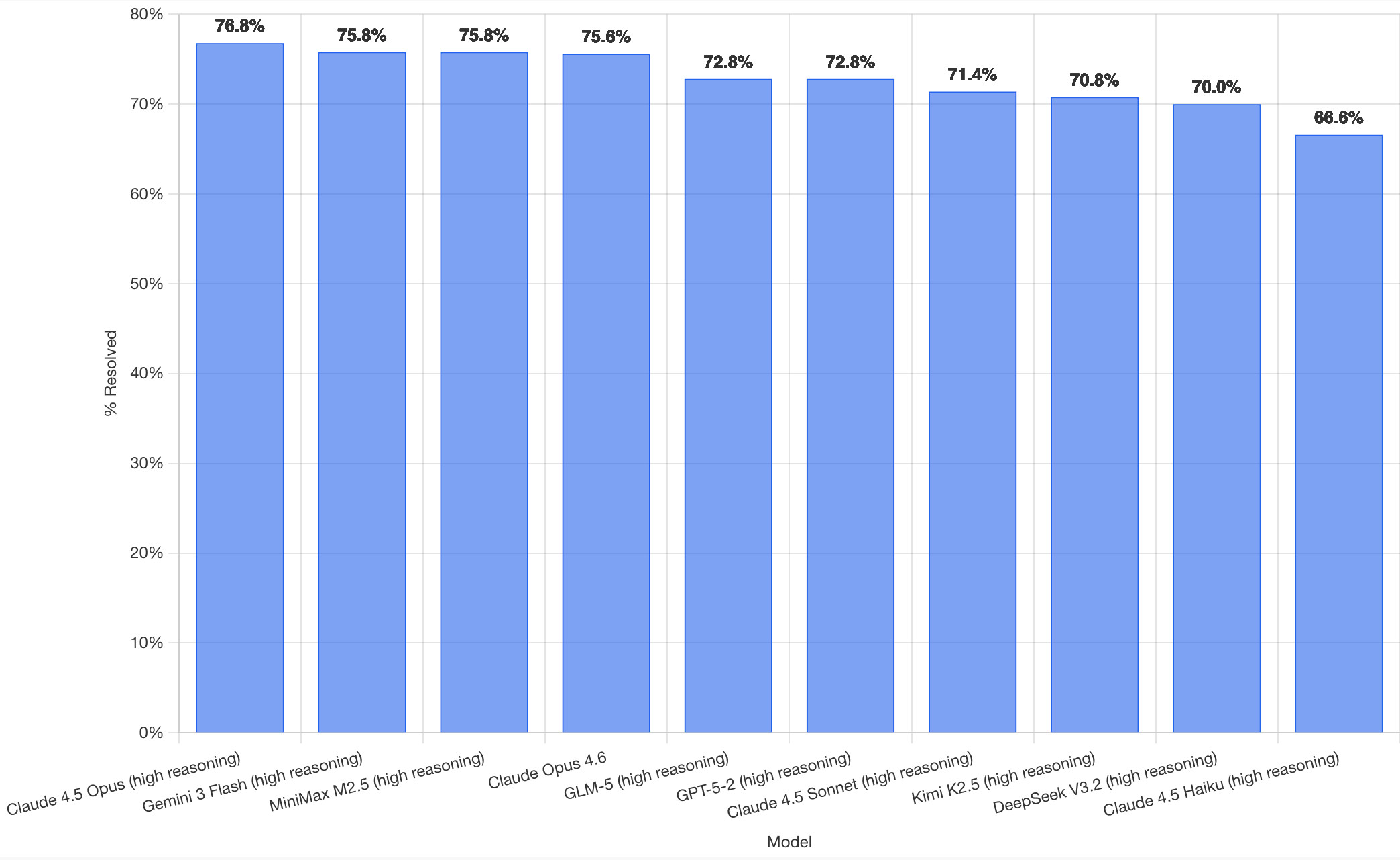

Here's how the top ten models performed:

It's interesting to see Claude Opus 4.5 beat Opus 4.6, though only by about a percentage point. 4.5 Opus is top, then Gemini 3 Flash, then MiniMax M2.5 - a 229B model released [last week](https://www.minimax.io/news/minimax-m25) by Chinese lab MiniMax. GLM-5, Kimi K2.5 and DeepSeek V3.2 are three more Chinese models that make the top ten as well.

OpenAI's GPT-5.2 is their highest performing model at position 6, but it's worth noting that their best coding model, GPT-5.3-Codex, is not represented - maybe because it's not yet available in the OpenAI API.

This benchmark uses the same system prompt for every model, which is important for a fair comparison but does mean that the quality of the different harnesses or optimized prompts is not being measured here.

The chart above is a screenshot from the SWE-bench website, but their charts don't show the actual percentage values on the bars. I successfully used Claude for Chrome to add these - [transcript here](https://claude.ai/share/81a0c519-c727-4caa-b0d4-0d866375d0da). My prompt sequence included:

> Use claude in chrome to open https://www.swebench.com/

> Click on "Compare results" and then select "Select top 10"

> See those bar charts? I want them to display the percentage on each bar so I can take a better screenshot, modify the page like that

I'm impressed at how well this worked - Claude injected custom JavaScript into the page to draw additional labels on top of the existing chart.

![Screenshot of a Claude AI conversation showing browser automation. A thinking step reads "Pivoted strategy to avoid recursion issues with chart labeling >" followed by the message "Good, the chart is back. Now let me carefully add the labels using an inline plugin on the chart instance to avoid the recursion issue." A collapsed "Browser_evaluate" section shows a browser_evaluate tool call with JavaScript code using Chart.js canvas context to draw percentage labels on bars: meta.data.forEach((bar, index) => { const value = dataset.data[index]; if (value !== undefined && value !== null) { ctx.save(); ctx.textAlign = 'center'; ctx.textBaseline = 'bottom'; ctx.fillStyle = '#333'; ctx.font = 'bold 12px sans-serif'; ctx.fillText(value.toFixed(1) + '%', bar.x, bar.y - 5); A pending step reads "Let me take a screenshot to see if it worked." followed by a completed "Done" step, and the message "Let me take a screenshot to check the result."](https://static.simonwillison.net/static/2026/claude-chrome-draw-on-chart.jpg) |

| blogmark |

9302 |

2026-02-19 01:25:33+00:00 |

LadybirdBrowser/ladybird: Abandon Swift adoption - Hacker News |

Back [in August 2024](https://simonwillison.net/2024/Aug/11/ladybird-set-to-adopt-swift/) the Ladybird browser project announced an intention to adopt Swift as their memory-safe language of choice.

As of [this commit](https://github.com/LadybirdBrowser/ladybird/commit/e87f889e31afbb5fa32c910603c7f5e781c97afd) it looks like they've changed their mind:

> **Everywhere: Abandon Swift adoption**

>

> After making no progress on this for a very long time, let's acknowledge it's not going anywhere and remove it from the codebase. |

| blogmark |

9301 |

2026-02-18 17:07:31+00:00 |

The A.I. Disruption We’ve Been Waiting for Has Arrived - |

New opinion piece from Paul Ford in the New York Times. Unsurprisingly for a piece by Paul it's packed with quoteworthy snippets, but a few stood out for me in particular.

Paul describes the [November moment](https://simonwillison.net/2026/Jan/4/inflection/) that so many other programmers have observed, and highlights Claude Code's ability to revive old side projects:

> [Claude Code] was always a helpful coding assistant, but in November it suddenly got much better, and ever since I’ve been knocking off side projects that had sat in folders for a decade or longer. It’s fun to see old ideas come to life, so I keep a steady flow. Maybe it adds up to a half-hour a day of my time, and an hour of Claude’s.

>

> November was, for me and many others in tech, a great surprise. Before, A.I. coding tools were often useful, but halting and clumsy. Now, the bot can run for a full hour and make whole, designed websites and apps that may be flawed, but credible. I spent an entire session of therapy talking about it.

And as the former CEO of a respected consultancy firm (Postlight) he's well positioned to evaluate the potential impact:

> When you watch a large language model slice through some horrible, expensive problem — like migrating data from an old platform to a modern one — you feel the earth shifting. I was the chief executive of a software services firm, which made me a professional software cost estimator. When I rebooted my messy personal website a few weeks ago, I realized: I would have paid $25,000 for someone else to do this. When a friend asked me to convert a large, thorny data set, I downloaded it, cleaned it up and made it pretty and easy to explore. In the past I would have charged $350,000.

>

> That last price is full 2021 retail — it implies a product manager, a designer, two engineers (one senior) and four to six months of design, coding and testing. Plus maintenance. Bespoke software is joltingly expensive. Today, though, when the stars align and my prompts work out, I can do hundreds of thousands of dollars worth of work for fun (fun for me) over weekends and evenings, for the price of the Claude $200-a-month plan.

He also neatly captures the inherent community tension involved in exploring this technology:

> All of the people I love hate this stuff, and all the people I hate love it. And yet, likely because of the same personality flaws that drew me to technology in the first place, I am annoyingly excited. |

| quotation |

2030 |

2026-02-18 16:50:07+00:00 |

LLMs are eating specialty skills. There will be less use of specialist front-end and back-end developers as the LLM-driving skills become more important than the details of platform usage. Will this lead to a greater recognition of the role of [Expert Generalists](https://martinfowler.com/articles/expert-generalist.html)? Or will the ability of LLMs to write lots of code mean they code around the silos rather than eliminating them? - Martin Fowler |

|

| blogmark |

9300 |

2026-02-17 23:58:58+00:00 |

Introducing Claude Sonnet 4.6 - Hacker News |

Sonnet 4.6 is out today, and Anthropic claim it offers similar performance to [November's Opus 4.5](https://simonwillison.net/2025/Nov/24/claude-opus/) while maintaining the Sonnet pricing of $3/million input and $15/million output tokens (the Opus models are $5/$25). Here's [the system card PDF](https://www-cdn.anthropic.com/78073f739564e986ff3e28522761a7a0b4484f84.pdf).
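To get a feel for what that price difference means in practice, here's a minimal cost sketch using only the per-million-token prices quoted above. The workload numbers are made up for illustration, the `sonnet` and `opus` keys are just labels rather than API model IDs, and this ignores anything like caching discounts or long-context pricing:

```python
# Prices in US dollars per million tokens, as quoted in this post.
PRICES = {
    "sonnet": {"input": 3.00, "output": 15.00},
    "opus": {"input": 5.00, "output": 25.00},
}


def cost_usd(model, input_tokens, output_tokens):
    """Estimated cost in dollars for a given number of tokens."""
    price = PRICES[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 2 million input tokens, 500,000 output tokens.
    for model in PRICES:
        print(model, cost_usd(model, 2_000_000, 500_000))
```

Because both the input and output prices are in the same 3:5 ratio, the Sonnet cost comes out at 60% of the Opus cost whatever the mix of input and output tokens.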

Sonnet 4.6 has a "reliable knowledge cutoff" of August 2025, compared to Opus 4.6's May 2025 and Haiku 4.5's February 2025. Both Opus and Sonnet default to 200,000 max input tokens but can stretch to 1 million in beta and at a higher cost.

I just released [llm-anthropic 0.24](https://github.com/simonw/llm-anthropic/releases/tag/0.24) with support for both Sonnet 4.6 and Opus 4.6. Claude Code [did most of the work](https://github.com/simonw/llm-anthropic/pull/65) - the new models had a fiddly amount of extra details around adaptive thinking and no longer supporting prefixes, as described [in Anthropic's migration guide](https://platform.claude.com/docs/en/about-claude/models/migration-guide).

Here's [what I got](https://gist.github.com/simonw/b185576a95e9321b441f0a4dfc0e297c) from:

    uvx --with llm-anthropic llm 'Generate an SVG of a pelican riding a bicycle' -m claude-sonnet-4.6

The SVG comments include:

    <!-- Hat (fun accessory) -->

I tried a second time and also got a top hat. Sonnet 4.6 apparently loves top hats!

For comparison, here's the pelican Opus 4.5 drew me [in November](https://simonwillison.net/2025/Nov/24/claude-opus/):

And here's Anthropic's current best pelican, drawn by Opus 4.6 [on February 5th](https://simonwillison.net/2026/Feb/5/two-new-models/):

Opus 4.6 produces the best pelican beak/pouch. I do think the top hat from Sonnet 4.6 is a nice touch though. |

| blogmark |

9299 |

2026-02-17 23:02:33+00:00 |

Rodney v0.4.0 - |

My [Rodney](https://github.com/simonw/rodney) CLI tool for browser automation has attracted quite the flurry of PRs since I announced it [last week](https://simonwillison.net/2026/Feb/10/showboat-and-rodney/#rodney-cli-browser-automation-designed-to-work-with-showboat). Here are the release notes for the just-released v0.4.0:

> - Errors now use exit code 2, which means exit code 1 is just for check failures. [#15](https://github.com/simonw/rodney/pull/15)

> - New `rodney assert` command for running JavaScript tests, exit code 1 if they fail. [#19](https://github.com/simonw/rodney/issues/19)

> - New directory-scoped sessions with `--local`/`--global` flags. [#14](https://github.com/simonw/rodney/pull/14)

> - New `reload --hard` and `clear-cache` commands. [#17](https://github.com/simonw/rodney/pull/17)

> - New `rodney start --show` option to make the browser window visible. Thanks, [Antonio Cuni](https://github.com/antocuni). [#13](https://github.com/simonw/rodney/pull/13)

> - New `rodney connect PORT` command to debug an already-running Chrome instance. Thanks, [Peter Fraenkel](https://github.com/pnf). [#12](https://github.com/simonw/rodney/pull/12)

> - New `RODNEY_HOME` environment variable to support custom state directories. Thanks, [Senko Rašić](https://github.com/senko). [#11](https://github.com/simonw/rodney/pull/11)

> - New `--insecure` flag to ignore certificate errors. Thanks, [Jakub Zgoliński](https://github.com/zgolus). [#10](https://github.com/simonw/rodney/pull/10)

> - Windows support: avoid `Setsid` on Windows via build-tag helpers. Thanks, [adm1neca](https://github.com/adm1neca). [#18](https://github.com/simonw/rodney/pull/18)

> - Tests now run on `windows-latest` and `macos-latest` in addition to Linux.
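The split between exit code 1 (a check failed) and exit code 2 (something went wrong) makes it possible for a wrapper script to tell the two apart. Here's a rough sketch of how that could look from Python. The `classify` and `run_and_classify` helpers are my own invention, treating 0 as success is an assumption based on the usual convention, and the demo uses stand-in commands rather than Rodney itself so that it runs anywhere:

```python
import subprocess
import sys


def classify(returncode):
    """Map an exit code to a label, following the convention in the release notes."""
    if returncode == 0:
        return "pass"  # assumed: the usual success convention
    if returncode == 1:
        return "check failed"
    if returncode == 2:
        return "error"
    return "unknown"


def run_and_classify(argv):
    """Run a command and classify its exit code."""
    result = subprocess.run(argv, capture_output=True, text=True)
    return classify(result.returncode)


if __name__ == "__main__":
    # Stand-in commands that simply exit with a given code.
    for code in (0, 1, 2):
        argv = [sys.executable, "-c", f"import sys; sys.exit({code})"]
        print(code, run_and_classify(argv))
```

A CI job could use this distinction to retry on errors while reporting check failures immediately.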

I've been using [Showboat](https://github.com/simonw/showboat) to create demos of new features - here those are for [rodney assert](https://github.com/simonw/rodney/tree/v0.4.0/notes/assert-command-demo), [rodney reload --hard](https://github.com/simonw/rodney/tree/v0.4.0/notes/clear-cache-demo), [rodney exit codes](https://github.com/simonw/rodney/tree/v0.4.0/notes/error-codes-demo), and [rodney start --local](https://github.com/simonw/rodney/tree/v0.4.0/notes/local-sessions-demo).

The `rodney assert` command is pretty neat: you can now use Rodney to test a web app through multiple steps in a shell script that looks something like this (adapted from [the README](https://github.com/simonw/rodney/blob/v0.4.0/README.md#combining-checks-in-a-shell-script)):

<div class="highlight highlight-source-shell"><pre><span class="pl-c"><span class="pl-c">#!</span>/bin/bash</span>

<span class="pl-c1">set</span> -euo pipefail

FAIL=0

<span class="pl-en">check</span>() {

<span class="pl-k">if</span> <span class="pl-k">!</span> <span class="pl-s"><span class="pl-pds">"</span><span class="pl-smi">$@</span><span class="pl-pds">"</span></span><span class="pl-k">;</span> <span class="pl-k">then</span>

<span class="pl-c1">echo</span> <span class="pl-s"><span class="pl-pds">"</span>FAIL: <span class="pl-smi">$*</span><span class="pl-pds">"</span></span>

FAIL=1

<span class="pl-k">fi</span>

}

rodney start

rodney open <span class="pl-s"><span class="pl-pds">"</span>https://example.com<span class="pl-pds">"</span></span>

rodney waitstable

<span class="pl-c"><span class="pl-c">#</span> Assert elements exist</span>

check rodney exists <span class="pl-s"><span class="pl-pds">"</span>h1<span class="pl-pds">"</span></span>

<span class="pl-c"><span class="pl-c">#</span> Assert key elements are visible</span>

check rodney visible <span class="pl-s"><span class="pl-pds">"</span>h1<span class="pl-pds">"</span></span>

check rodney visible <span class="pl-s"><span class="pl-pds">"</span>#main-content<span class="pl-pds">"</span></span>

<span class="pl-c"><span class="pl-c">#</span> Assert JS expressions</span>

check rodney assert <span class="pl-s"><span class="pl-pds">'</span>document.title<span class="pl-pds">'</span></span> <span class="pl-s"><span class="pl-pds">'</span>Example Domain<span class="pl-pds">'</span></span>

check rodney assert <span class="pl-s"><span class="pl-pds">'</span>document.querySelectorAll("p").length<span class="pl-pds">'</span></span> <span class="pl-s"><span class="pl-pds">'</span>2<span class="pl-pds">'</span></span>

<span class="pl-c"><span class="pl-c">#</span> Assert accessibility requirements</span>

check rodney ax-find --role navigation

rodney stop

<span class="pl-k">if</span> [ <span class="pl-s"><span class="pl-pds">"</span><span class="pl-smi">$FAIL</span><span class="pl-pds">"</span></span> <span class="pl-k">-ne</span> 0 ]<span class="pl-k">;</span> <span class="pl-k">then</span>

<span class="pl-c1">echo</span> <span class="pl-s"><span class="pl-pds">"</span>Some checks failed<span class="pl-pds">"</span></span>

<span class="pl-c1">exit</span> 1

<span class="pl-k">fi</span>

<span class="pl-c1">echo</span> <span class="pl-s"><span class="pl-pds">"</span>All checks passed<span class="pl-pds">"</span></span></pre></div> |

| quotation |

2029 |

2026-02-17 14:49:04+00:00 |

This is the story of the United Space Ship Enterprise. Assigned a five year patrol of our galaxy, the giant starship visits Earth colonies, regulates commerce, and explores strange new worlds and civilizations. These are its voyages... and its adventures. - ROUGH DRAFT 8/2/66 |

|

| blogmark |

9298 |

2026-02-17 14:09:43+00:00 |

First kākāpō chick in four years hatches on Valentine's Day - MetaFilter |

First chick of [the 2026 breeding season](https://simonwillison.net/2026/Jan/8/llm-predictions-for-2026/#1-year-k-k-p-parrots-will-have-an-outstanding-breeding-season)!

> Kākāpō Yasmine hatched an egg fostered from kākāpō Tīwhiri on Valentine's Day, bringing the total number of kākāpō to 237 – though it won’t be officially added to the population until it fledges.

Here's why the egg was fostered:

> "Kākāpō mums typically have the best outcomes when raising a maximum of two chicks. Biological mum Tīwhiri has four fertile eggs this season already, while Yasmine, an experienced foster mum, had no fertile eggs."

And an [update from conservation biologist Andrew Digby](https://bsky.app/profile/digs.bsky.social/post/3mf25glzt2c2b) - a second chick hatched this morning!

> The second #kakapo chick of the #kakapo2026 breeding season hatched this morning: Hine Taumai-A1-2026 on Ako's nest on Te Kākahu. We transferred the egg from Anchor two nights ago. This is Ako's first-ever chick, which is just a few hours old in this video.

That post [has a video](https://bsky.app/profile/digs.bsky.social/post/3mf25glzt2c2b) of mother and chick.

|

| quotation |

2028 |

2026-02-17 14:04:44+00:00 |

But the intellectually interesting part for me is something else. **I now have something close to a magic box where I throw in a question and a first answer comes back basically for free, in terms of human effort**. Before this, the way I'd explore a new idea is to either clumsily put something together myself or ask a student to run something short for signal, and if it's there, we’d go deeper. That quick signal step, i.e., finding out if a question has any meat to it, is what I can now do without taking up anyone else's time. It’s now between just me, Claude Code, and a few days of GPU time.

I don’t know what this means for how we do research long term. I don’t think anyone does yet. But **the distance between a question and a first answer just got very small**. - Dimitris Papailiopoulos |

|

| blogmark |

9297 |

2026-02-17 04:30:57+00:00 |

Qwen3.5: Towards Native Multimodal Agents - |

Alibaba's Qwen just released the first two models in the Qwen 3.5 series - one open weights, one proprietary. Both are multi-modal for vision input.

The open weight one is a Mixture of Experts model called Qwen3.5-397B-A17B. Interesting to see Qwen call out serving efficiency as a benefit of that architecture:

> Built on an innovative hybrid architecture that fuses linear attention (via Gated Delta Networks) with a sparse mixture-of-experts, the model attains remarkable inference efficiency: although it comprises 397 billion total parameters, just 17 billion are activated per forward pass, optimizing both speed and cost without sacrificing capability.

It's [807GB on Hugging Face](https://huggingface.co/Qwen/Qwen3.5-397B-A17B), and Unsloth have a [collection of smaller GGUFs](https://huggingface.co/unsloth/Qwen3.5-397B-A17B-GGUF) ranging in size from 94.2GB 1-bit to 462GB Q8_K_XL.
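Those file sizes can be turned into a rough "bits per parameter" figure, which helps make sense of the quantization labels. This is back-of-envelope arithmetic only: it assumes 1GB means 10^9 bytes and that the whole file is weights, which won't be exactly true since the files also carry metadata and the vision components.

```python
TOTAL_PARAMS = 397e9   # total parameters, from the model name
ACTIVE_PARAMS = 17e9   # parameters activated per forward pass


def bits_per_param(size_gb, params=TOTAL_PARAMS):
    """Average bits stored per parameter, assuming 1 GB = 10**9 bytes."""
    return size_gb * 1e9 * 8 / params


def active_fraction():
    """Share of the total parameters used on each forward pass."""
    return ACTIVE_PARAMS / TOTAL_PARAMS


if __name__ == "__main__":
    for label, size_gb in [("full", 807), ("1-bit GGUF", 94.2), ("Q8_K_XL GGUF", 462)]:
        print(f"{label}: {bits_per_param(size_gb):.2f} bits/param")
    print(f"active: {active_fraction():.1%}")
```

The full upload works out to about 16.3 bits per parameter, which is consistent with 16-bit weights. The "1-bit" GGUF averages about 1.9 bits, presumably because some tensors are kept at higher precision, and Q8_K_XL averages about 9.3 bits. Only about 4.3% of the parameters are active on each forward pass.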

I got this [pelican](https://simonwillison.net/tags/pelican-riding-a-bicycle/) from the [OpenRouter hosted model](https://openrouter.ai/qwen/qwen3.5-397b-a17b) ([transcript](https://gist.github.com/simonw/625546cf6b371f9c0040e64492943b82)):

The proprietary hosted model is called Qwen3.5 Plus 2026-02-15, and is a little confusing. Qwen researcher [Junyang Lin says](https://twitter.com/JustinLin610/status/2023340126479569140):

> Qwen3.5-Plus is a hosted API version of 397B. As the model natively supports 256K tokens, Qwen3.5-Plus supports 1M token context length. Additionally it supports search and code interpreter, which you can use on Qwen Chat with Auto mode.

Here's [its pelican](https://gist.github.com/simonw/9507dd47483f78dc1195117735273e20), which is similar in quality to the open weights model:

|

| entry |

9140 |

2026-02-17 00:43:45+00:00 |

Two new Showboat tools: Chartroom and datasette-showboat |

<p>I <a href="https://simonwillison.net/2026/Feb/10/showboat-and-rodney/">introduced Showboat</a> a week ago - my CLI tool that helps coding agents create Markdown documents that demonstrate the code that they have created. I've been finding new ways to use it on a daily basis, and I've just released two new tools to help get the best out of the Showboat pattern. <a href="https://github.com/simonw/chartroom">Chartroom</a> is a CLI charting tool that works well with Showboat, and <a href="https://github.com/simonw/datasette-showboat">datasette-showboat</a> lets Showboat's new remote publishing feature incrementally push documents to a Datasette instance.</p>

<ul>

<li><a href="https://simonwillison.net/2026/Feb/17/chartroom-and-datasette-showboat/#showboat-remote-publishing">Showboat remote publishing</a></li>

<li><a href="https://simonwillison.net/2026/Feb/17/chartroom-and-datasette-showboat/#datasette-showboat">datasette-showboat</a></li>

<li><a href="https://simonwillison.net/2026/Feb/17/chartroom-and-datasette-showboat/#chartroom">Chartroom</a></li>

<li><a href="https://simonwillison.net/2026/Feb/17/chartroom-and-datasette-showboat/#how-i-built-chartroom">How I built Chartroom</a></li>

<li><a href="https://simonwillison.net/2026/Feb/17/chartroom-and-datasette-showboat/#the-burgeoning-showboat-ecosystem">The burgeoning Showboat ecosystem</a></li>

</ul>

<h4 id="showboat-remote-publishing">Showboat remote publishing</h4>

<p>I normally use Showboat in Claude Code for web (see <a href="https://simonwillison.net/2026/Feb/16/rodney-claude-code/">note from this morning</a>). I've used it in several different projects in the past few days, each of them with a prompt that looks something like this:</p>

<blockquote>

<p><code>Use "uvx showboat --help" to perform a very thorough investigation of what happens if you use the Python sqlite-chronicle and sqlite-history-json libraries against the same SQLite database table</code></p>

</blockquote>

<p>Here's <a href="https://github.com/simonw/research/blob/main/sqlite-chronicle-vs-history-json/demo.md">the resulting document</a>.</p>

<p>Just telling Claude Code to run <code>uvx showboat --help</code> is enough for it to learn how to use the tool - the <a href="https://github.com/simonw/showboat/blob/main/help.txt">help text</a> is designed to work as a sort of ad-hoc Skill document.</p>

<p>The one catch with this approach is that I can't <em>see</em> the new Showboat document until it's finished. I have to wait for Claude to commit the document plus embedded screenshots and push that to a branch in my GitHub repo - then I can view it through the GitHub interface.</p>

<p>For a while I've been thinking it would be neat to have a remote web server of my own which Claude instances can submit updates to while they are working. Then this morning I realized Showboat might be the ideal mechanism to set that up...</p>

<p>Showboat <a href="https://github.com/simonw/showboat/releases/tag/v0.6.0">v0.6.0</a> adds a new "remote" feature. It's almost invisible to users of the tool itself, instead being configured by an environment variable.</p>

<p>Set a variable like this:</p>

<div class="highlight highlight-source-shell"><pre><span class="pl-k">export</span> SHOWBOAT_REMOTE_URL=<span class="pl-s"><span class="pl-pds">"</span>https://www.example.com/submit?token=xyz<span class="pl-pds">"</span></span></pre></div>

<p>And every time you run a <code>showboat init</code> or <code>showboat note</code> or <code>showboat exec</code> or <code>showboat image</code> command the resulting document fragments will be POSTed to that API endpoint, in addition to the Showboat Markdown file itself being updated.</p>

<p>There are <a href="https://github.com/simonw/showboat/blob/v0.6.0/README.md#remote-document-streaming">full details in the Showboat README</a> - it's a very simple API format, using regular POST form variables or a multipart form upload for the image attached to <code>showboat image</code>.</p>
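<p>To give a sense of how little a receiver needs to do, here's a minimal sketch of the server-side handling for the simple form-encoded case. It checks the <code>token</code> query string parameter and decodes the POST body into a dictionary. The <code>parse_update</code> function is hypothetical, and I've deliberately avoided assuming any field names (see the README for the real ones). It does not handle the multipart uploads used by <code>showboat image</code>.</p>

```python
import hmac
from urllib.parse import parse_qs, urlsplit


def parse_update(request_target, body, expected_token):
    """Validate the token in the query string and decode a form-encoded body.

    Returns a dict of form fields, or None if the token does not match.
    This is an illustrative helper, not part of Showboat.
    """
    query = parse_qs(urlsplit(request_target).query)
    token = query.get("token", [""])[0]
    # Constant-time comparison to avoid leaking the token via timing.
    if not hmac.compare_digest(token.encode("utf-8"), expected_token.encode("utf-8")):
        return None
    fields = parse_qs(body.decode("utf-8"), keep_blank_values=True)
    # Keep the first value for each field name.
    return {name: values[0] for name, values in fields.items()}


if __name__ == "__main__":
    # "example_field" is a made-up field name for demonstration only.
    print(parse_update("/submit?token=xyz", b"example_field=hello+world", "xyz"))
    print(parse_update("/submit?token=wrong", b"example_field=hello+world", "xyz"))
```

<p>A real endpoint would wrap this in a web framework of your choice and persist each fragment as it arrives.</p>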

<h4 id="datasette-showboat">datasette-showboat</h4>

<p>It's simple enough to build a webapp to receive these updates from Showboat, but I needed one that I could easily deploy and would work well with the rest of my personal ecosystem.</p>

<p>So I had Claude Code write me a Datasette plugin that could act as a Showboat remote endpoint. I actually had this building at the same time as the Showboat remote feature, a neat example of running <a href="https://simonwillison.net/2025/Oct/5/parallel-coding-agents/">parallel agents</a>.</p>

<p><strong><a href="https://github.com/simonw/datasette-showboat">datasette-showboat</a></strong> is a Datasette plugin that adds a <code>/-/showboat</code> endpoint to Datasette for viewing documents and a <code>/-/showboat/receive</code> endpoint for receiving updates from Showboat.</p>

<p>Here's a very quick way to try it out:</p>

<div class="highlight highlight-source-shell"><pre>uvx --with datasette-showboat --prerelease=allow \

datasette showboat.db --create \

-s plugins.datasette-showboat.database showboat \

-s plugins.datasette-showboat.token secret123 \

--root --secret cookie-secret-123</pre></div>

<p>Click on the sign in as root link that shows up in the console, then navigate to <a href="http://127.0.0.1:8001/-/showboat">http://127.0.0.1:8001/-/showboat</a> to see the interface.</p>

<p>Now set your environment variable to point to this instance:</p>

<div class="highlight highlight-source-shell"><pre><span class="pl-k">export</span> SHOWBOAT_REMOTE_URL=<span class="pl-s"><span class="pl-pds">"</span>http://127.0.0.1:8001/-/showboat/receive?token=secret123<span class="pl-pds">"</span></span></pre></div>

<p>And run Showboat like this:</p>

<div class="highlight highlight-source-shell"><pre>uvx showboat init demo.md <span class="pl-s"><span class="pl-pds">"</span>Showboat Feature Demo<span class="pl-pds">"</span></span></pre></div>

<p>Refresh that page and you should see this:</p>

<p><img src="https://static.simonwillison.net/static/2026/datasette-showboat-documents.jpg" alt="Title: Showboat. Remote viewer for Showboat documents. Showboat Feature Demo 2026-02-17 00:06 · 6 chunks, UUID. To send showboat output to this server, set the SHOWBOAT_REMOTE_URL environment variable: export SHOWBOAT_REMOTE_URL="http://127.0.0.1:8001/-/showboat/receive?token=your-token"" style="max-width: 100%;" /></p>

<p>Click through to the document, then start Claude Code or Codex or your agent of choice and prompt:</p>

<blockquote>

<p><code>Run 'uvx showboat --help' and then use showboat to add to the existing demo.md document with notes and exec and image to demonstrate the tool - fetch a placekitten for the image demo.</code></p>

</blockquote>

<p>The <code>init</code> command assigns a UUID and title and sends those up to Datasette.</p>

<p><img src="https://static.simonwillison.net/static/2026/datasette-showboat.gif" alt="Animated demo - in the foreground a terminal window runs Claude Code, which executes various Showboat commands. In the background a Firefox window where the Showboat Feature Demo adds notes then some bash commands, then a placekitten image." style="max-width: 100%;" /></p>

<p>The best part of this is that it works in Claude Code for web. Run the plugin on a server somewhere (an exercise left up to the reader - I use <a href="https://fly.io/">Fly.io</a> to host mine) and set that <code>SHOWBOAT_REMOTE_URL</code> environment variable in your Claude environment, then any time you tell it to use Showboat the document it creates will be transmitted to your server and viewable in real time.</p>

<p>I built <a href="https://simonwillison.net/2026/Feb/10/showboat-and-rodney/#rodney-cli-browser-automation-designed-to-work-with-showboat">Rodney</a>, a CLI browser automation tool, specifically to work with Showboat. It makes it easy to have a Showboat document load up web pages, interact with them via clicks or injected JavaScript and capture screenshots to embed in the Showboat document and show the effects.</p>

<p>This is wildly useful for hacking on web interfaces using Claude Code for web, especially when coupled with the new remote publishing feature. I only got this stuff working this morning and I've already had several sessions where Claude Code has published screenshots of its work in progress, which I've then been able to provide feedback on directly in the Claude session while it's still working.</p>

<h4 id="chartroom">Chartroom</h4>

<p>A few days ago I had another idea for a way to extend the Showboat ecosystem: what if Showboat documents could easily include charts?</p>

<p>I sometimes fire up Claude Code for data analysis tasks, often telling it to download a SQLite database and then run queries against it to figure out interesting things from the data.</p>

<p>With a simple CLI tool that produced PNG images I could have Claude use Showboat to build a document with embedded charts to help illustrate its findings.</p>

<p><strong><a href="https://github.com/simonw/chartroom">Chartroom</a></strong> is exactly that. It's effectively a thin wrapper around the excellent <a href="https://matplotlib.org/">matplotlib</a> Python library, designed to be used by coding agents to create charts that can be embedded in Showboat documents.</p>

<p>Here's how to render a simple bar chart:</p>

<div class="highlight highlight-source-shell"><pre><span class="pl-c1">echo</span> <span class="pl-s"><span class="pl-pds">'</span>name,value</span>

<span class="pl-s">Alice,42</span>

<span class="pl-s">Bob,28</span>

<span class="pl-s">Charlie,35</span>

<span class="pl-s">Diana,51</span>

<span class="pl-s">Eve,19<span class="pl-pds">'</span></span> <span class="pl-k">|</span> uvx chartroom bar --csv \

--title <span class="pl-s"><span class="pl-pds">'</span>Sales by Person<span class="pl-pds">'</span></span> --ylabel <span class="pl-s"><span class="pl-pds">'</span>Sales<span class="pl-pds">'</span></span></pre></div>

<p><a target="_blank" rel="noopener noreferrer nofollow" href="https://raw.githubusercontent.com/simonw/chartroom/8812afc02e1310e9eddbb56508b06005ff2c0ed5/demo/1f6851ec-2026-02-14.png"><img src="https://raw.githubusercontent.com/simonw/chartroom/8812afc02e1310e9eddbb56508b06005ff2c0ed5/demo/1f6851ec-2026-02-14.png" alt="A chart of those numbers, with a title and y-axis label" style="max-width: 100%;" /></a></p>

<p>It can also do line charts, bar charts, scatter charts, and histograms - as seen in <a href="https://github.com/simonw/chartroom/blob/0.2.1/demo/README.md">this demo document</a> that was built using Showboat.</p>

<p>Chartroom can also generate alt text. If you add <code>-f alt</code> to the above it will output the alt text for the chart instead of the image:</p>

<div class="highlight highlight-source-shell"><pre><span class="pl-c1">echo</span> <span class="pl-s"><span class="pl-pds">'</span>name,value</span>

<span class="pl-s">Alice,42</span>

<span class="pl-s">Bob,28</span>

<span class="pl-s">Charlie,35</span>

<span class="pl-s">Diana,51</span>

<span class="pl-s">Eve,19<span class="pl-pds">'</span></span> <span class="pl-k">|</span> uvx chartroom bar --csv \

--title <span class="pl-s"><span class="pl-pds">'</span>Sales by Person<span class="pl-pds">'</span></span> --ylabel <span class="pl-s"><span class="pl-pds">'</span>Sales<span class="pl-pds">'</span></span> -f alt</pre></div>

<p>Outputs:</p>

<pre><code>Sales by Person. Bar chart of value by name — Alice: 42, Bob: 28, Charlie: 35, Diana: 51, Eve: 19

</code></pre>

<p>Or you can use <code>-f html</code> or <code>-f markdown</code> to get the image tag with alt text directly:</p>

<div class="highlight highlight-text-md"><pre><span class="pl-s">![</span>Sales by Person. Bar chart of value by name — Alice: 42, Bob: 28, Charlie: 35, Diana: 51, Eve: 19<span class="pl-s">]</span><span class="pl-s">(</span><span class="pl-corl">/Users/simon/chart-7.png</span><span class="pl-s">)</span></pre></div>

<p>I added support for Markdown images with alt text to Showboat in <a href="https://github.com/simonw/showboat/releases/tag/v0.5.0">v0.5.0</a>, to complement this feature of Chartroom.</p>

<p>Finally, Chartroom has support for different <a href="https://matplotlib.org/stable/gallery/style_sheets/style_sheets_reference.html">matplotlib styles</a>. I had Claude build a Showboat document to demonstrate these all in one place - you can see that at <a href="https://github.com/simonw/chartroom/blob/main/demo/styles.md">demo/styles.md</a>.</p>

<h4 id="how-i-built-chartroom">How I built Chartroom</h4>

<p>I started the Chartroom repository with my <a href="https://github.com/simonw/click-app">click-app</a> cookiecutter template, then told a fresh Claude Code for web session:</p>

<blockquote>

<p>We are building a Python CLI tool which uses matplotlib to generate a PNG image containing a chart. It will have multiple sub commands for different chart types, controlled by command line options. Everything you need to know to use it will be available in the single "chartroom --help" output.</p>

<p>It will accept data from files or standard input as CSV or TSV or JSON, similar to how sqlite-utils accepts data - clone simonw/sqlite-utils to /tmp for reference there. Clone matplotlib/matplotlib for reference as well</p>

<p>It will also accept data from --sql path/to/sqlite.db "select ..." which runs in read-only mode</p>

<p>Start by asking clarifying questions - do not use the ask user tool though it is broken - and generate a spec for me to approve</p>

<p>Once approved proceed using red/green TDD running tests with "uv run pytest"</p>

<p>Also while building maintain a demo/README.md document using the "uvx showboat --help" tool - each time you get a new chart type working commit the tests, implementation, root level README update and a new version of that demo/README.md document with an inline image demo of the new chart type (which should be a UUID image filename managed by the showboat image command and should be stored in the demo/ folder</p>

<p>Make sure "uv build" runs cleanly without complaining about extra directories but also ensure dist/ and uv.lock are in gitignore</p>

</blockquote>

<p>This got most of the work done. You can see the rest <a href="https://github.com/simonw/chartroom/pulls?q=is%3Apr+is%3Aclosed">in the PRs</a> that followed.</p>

<h4 id="the-burgeoning-showboat-ecosystem">The burgeoning Showboat ecosystem</h4>

<p>The Showboat family of tools now consists of <a href="https://github.com/simonw/showboat">Showboat</a> itself, <a href="https://github.com/simonw/rodney">Rodney</a> for browser automation, <a href="https://github.com/simonw/chartroom">Chartroom</a> for charting and <a href="https://github.com/simonw/datasette-showboat">datasette-showboat</a> for streaming remote Showboat documents to Datasette.</p>

<p>I'm enjoying how these tools can operate together based on a very loose set of conventions. If a tool can output a path to an image Showboat can include that image in a document. Any tool that can output text can be used with Showboat.</p>

<p>I'll almost certainly be building more tools that fit this pattern. They're very quick to knock out!</p>

<p>The environment variable mechanism for Showboat's remote streaming is a fun hack too - so far I'm just using it to stream documents somewhere else, but it's effectively a webhook extension mechanism that could likely be used for all sorts of things I haven't thought of yet.</p> |

| blogmark |

9296 |

2026-02-15 23:59:36+00:00 |

The AI Vampire - Tim Bray |

Steve Yegge's take on agent fatigue, and its relationship to burnout.

> Let's pretend you're the only person at your company using AI.

>

> In Scenario A, you decide you're going to impress your employer, and work for 8 hours a day at 10x productivity. You knock it out of the park and make everyone else look terrible by comparison.

>

> In that scenario, your employer captures 100% of the value from *you* adopting AI. You get nothing, or at any rate, it ain't gonna be 9x your salary. And everyone hates you now.

>

> And you're *exhausted.* You're tired, Boss. You got nothing for it.

>

> Congrats, you were just drained by a company. I've been drained to the point of burnout several times in my career, even at Google once or twice. But now with AI, it's oh, so much easier.

Steve reports needing more sleep due to the cognitive burden involved in agentic engineering, and notes that four hours of agent work a day is a more realistic pace:

> I’ve argued that AI has turned us all into Jeff Bezos, by automating the easy work, and leaving us with all the difficult decisions, summaries, and problem-solving. I find that I am only really comfortable working at that pace for short bursts of a few hours once or occasionally twice a day, even with lots of practice. |

| entry |

9139 |

2026-02-15 21:06:44+00:00 |

Deep Blue |

<p>We coined a new term on the <a href="https://simonwillison.net/2026/Jan/8/llm-predictions-for-2026/">Oxide and Friends podcast</a> last month (primary credit to Adam Leventhal) covering the sense of psychological ennui leading into existential dread that many software developers are feeling thanks to the encroachment of generative AI into their field of work.</p>

<p>We're calling it <strong>Deep Blue</strong>.</p>

<p>You can listen to it being coined in real time <a href="https://www.youtube.com/watch?v=lVDhQMiAbR8&t=2835s">from 47:15 in the episode</a>. I've included <a href="https://simonwillison.net/2026/Feb/15/deep-blue/#transcript">a transcript below</a>.</p>

<p>Deep Blue is a very real issue.</p>

<p>Becoming a professional software engineer is <em>hard</em>. Getting good enough for people to pay you money to write software takes years of dedicated work. The rewards are significant: this is a well compensated career which opens up a lot of great opportunities.</p>

<p>It's also a career that's mostly free from gatekeepers and expensive prerequisites. You don't need an expensive degree or accreditation. A laptop, an internet connection and a lot of time and curiosity is enough to get you started.</p>

<p>And it rewards the nerds! Spending your teenage years tinkering with computers turned out to be a very smart investment in your future.</p>

<p>The idea that this could all be stripped away by a chatbot is <em>deeply</em> upsetting.</p>

<p>I've seen signs of Deep Blue in most of the online communities I spend time in. I've even faced accusations from my peers that I am actively harming their future careers through my work helping people understand how well AI-assisted programming can work.</p>

<p>I think this is an issue which is causing genuine mental anguish for a lot of people in our community. Giving it a name makes it easier for us to have conversations about it.</p>

<h4 id="my-experiences-of-deep-blue">My experiences of Deep Blue</h4>

<p>I distinctly remember my first experience of Deep Blue. For me it was triggered by ChatGPT Code Interpreter back in early 2023.</p>

<p>My primary project is <a href="https://datasette.io/">Datasette</a>, an ecosystem of open source tools for telling stories with data. I had dedicated myself to the challenge of helping people (initially focusing on journalists) clean up, analyze and find meaning in data, in all sorts of shapes and sizes.</p>

<p>I expected I would need to build a lot of software for this! It felt like a challenge that could keep me happily engaged for many years to come.</p>

<p>Then I tried uploading a CSV file of <a href="https://data.sfgov.org/Public-Safety/Police-Department-Incident-Reports-2018-to-Present/wg3w-h783/about_data">San Francisco Police Department Incident Reports</a> - hundreds of thousands of rows - to ChatGPT Code Interpreter and... it did every piece of data cleanup and analysis I had on my napkin roadmap for the next few years with a couple of prompts.</p>

<p>It even converted the data into a neatly normalized SQLite database and let me download the result!</p>

<p>I remember having two competing thoughts in parallel.</p>

<p>On the one hand, as somebody who wants journalists to be able to do more with data, this felt like a <em>huge</em> breakthrough. Imagine giving every journalist in the world an on-demand analyst who could help them tackle any data question they could think of!</p>

<p>But on the other hand... <em>what was I even for</em>? My confidence in the value of my own projects took a painful hit. Was the path I'd chosen for myself suddenly a dead end?</p>

<p>I've had some further pangs of Deep Blue just in the past few weeks, thanks to the Claude Opus 4.5/4.6 and GPT-5.2/5.3 coding agent effect. As many other people are also observing, the latest generation of coding agents, given the right prompts, really can churn away for a few minutes to several hours and produce working, documented and fully tested software that exactly matches the criteria they were given.</p>

<p>"The code they write isn't any good" doesn't really cut it any more.</p>

<h4 id="transcript">A lightly edited transcript</h4>

<blockquote>

<p><strong>Bryan</strong>: I think that we're going to see a real problem with AI induced ennui where software engineers in particular get listless because the AI can do anything. Simon, what do you think about that?</p>

<p><strong>Simon</strong>: Definitely. Anyone who's paying close attention to coding agents is feeling some of that already. There's an extent where you sort of get over it when you realize that you're still useful, even though your ability to memorize the syntax of program languages is completely irrelevant now.</p>

<p>Something I see a lot of is people out there who are having existential crises and are very, very unhappy because they're like, "I dedicated my career to learning this thing and now it just does it. What am I even for?". I will very happily try and convince those people that they are for a whole bunch of things and that none of that experience they've accumulated has gone to waste, but psychologically it's a difficult time for software engineers.</p>

<p>[...]</p>

<p><strong>Bryan</strong>: Okay, so I'm going to predict that we name that. Whatever that is, we have a name for that kind of feeling and that kind of, whether you want to call it a blueness or a loss of purpose, and that we're kind of trying to address it collectively in a directed way.</p>

<p><strong>Adam</strong>: Okay, this is your big moment. Pick the name. If you call your shot from here, this is you pointing to the stands. You know, I – Like deep blue, you know.</p>

<p><strong>Bryan</strong>: Yeah, deep blue. I like that. I like deep blue. Deep blue. Oh, did you walk me into that, you bastard? You just blew out the candles on my birthday cake.</p>

<p>It wasn't my big moment at all. That was your big moment. No, that is, Adam, that is very good. That is deep blue.</p>

<p><strong>Simon</strong>: All of the chess players and the Go players went through this a decade ago and they have come out stronger.</p>

</blockquote>

<p>Turns out it was more than a decade ago: <a href="https://en.wikipedia.org/wiki/Deep_Blue_versus_Garry_Kasparov">Deep Blue defeated Garry Kasparov in 1997</a>.</p> |

| blogmark |

9295 |

2026-02-15 18:26:08+00:00 |

Gwtar: a static efficient single-file HTML format - Hacker News |

Fascinating new project from Gwern Branwen and Said Achmiz that targets the challenge of combining large numbers of assets into a single archived HTML file without that file being inconvenient to view in a browser.

The key trick it uses is to fire [window.stop()](https://developer.mozilla.org/en-US/docs/Web/API/Window/stop) early in the page to prevent the browser from downloading the whole thing, then follow that call with inline uncompressed tar content.

It can then make HTTP range requests to fetch content from that tar data on-demand when it is needed by the page.

The JavaScript that has already loaded rewrites asset URLs to point to `https://localhost/` purely so that they will fail to load. Then it uses a [PerformanceObserver](https://developer.mozilla.org/en-US/docs/Web/API/PerformanceObserver) to catch those attempted loads:

    let perfObserver = new PerformanceObserver((entryList, observer) => {
        resourceURLStringsHandler(entryList.getEntries().map(entry => entry.name));
    });
    perfObserver.observe({ entryTypes: [ "resource" ] });

That `resourceURLStringsHandler` callback finds the resource if it is already loaded or fetches it with an HTTP range request otherwise and then inserts the resource in the right place using a `blob:` URL.
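To make the range-request idea concrete, here's a minimal Python sketch. It is not Gwtar's actual code (which is JavaScript running in the page), just an illustration under stated assumptions: because the tar data is uncompressed, each file's bytes sit at a fixed offset, so a client that knows the offsets can ask for exactly the bytes it needs. The helper names `build_tar`, `index_members` and `range_header` are hypothetical, and `base_offset` stands in for wherever the tar data starts inside the HTML file.

```python
import io
import tarfile


def build_tar(files):
    """Return uncompressed tar bytes for a {name: bytes} mapping."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w") as tar:
        for name, data in files.items():
            info = tarfile.TarInfo(name)
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    return buf.getvalue()


def index_members(tar_bytes):
    """Map each non-empty file to the (start, end) span of its data, end inclusive."""
    index = {}
    with tarfile.open(fileobj=io.BytesIO(tar_bytes), mode="r:") as tar:
        for member in tar:
            if member.isfile() and member.size > 0:
                start = member.offset_data  # where this member's data begins
                index[member.name] = (start, start + member.size - 1)
    return index


def range_header(span, base_offset=0):
    """Build an HTTP Range header value; byte ranges are inclusive at both ends."""
    start, end = span
    return f"bytes={base_offset + start}-{base_offset + end}"


archive = build_tar({"style.css": b"body{}", "app.js": b"alert(1)"})
spans = index_members(archive)
start, end = spans["app.js"]
# Slicing the archive stands in for what a server would return for that range.
fetched = archive[start:end + 1]
```

A server that honours `Range` would answer with `206 Partial Content` containing just those bytes, which is what lets a large archive stay cheap to view.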

Here's what the `window.stop()` portion of the document looks like if you view the source:

Amusingly for an archive format it doesn't actually work if you open the file directly on your own computer. Here's what you see if you try to do that:

> You are seeing this message, instead of the page you should be seeing, because `gwtar` files **cannot be opened locally** (due to web browser security restrictions).

>

> To open this page on your computer, use the following shell command:

>

> `perl -ne'print $_ if $x; $x=1 if /<!-- GWTAR END/' < foo.gwtar.html | tar --extract`

>

> Then open the file `foo.html` in any web browser. |

| quotation |

2027 |

2026-02-15 13:36:20+00:00 |

I saw yet another “CSS is a massively bloated mess” whine and I’m like. My dude. My brother in Chromium. It is trying as hard as it can to express the totality of visual presentation and layout design and typography and animation and digital interactivity and a few other things in a human-readable text format. It’s not bloated, it’s fantastically ambitious. Its reach is greater than most of us can hope to grasp. Put some *respect* on its *name*. - Eric Meyer |

|

| blogmark |

9294 |

2026-02-15 05:20:11+00:00 |

How Generative and Agentic AI Shift Concern from Technical Debt to Cognitive Debt - Martin Fowler |

This piece by Margaret-Anne Storey is the best explanation of the term **cognitive debt** I've seen so far.

> *Cognitive debt*, a term gaining [traction](https://www.media.mit.edu/publications/your-brain-on-chatgpt/) recently, instead communicates the notion that the debt compounded from going fast lives in the brains of the developers and affects their lived experiences and abilities to “go fast” or to make changes. Even if AI agents produce code that could be easy to understand, the humans involved may have simply lost the plot and may not understand what the program is supposed to do, how their intentions were implemented, or how to possibly change it.

Margaret-Anne expands on this further with an anecdote about a student team she coached:

> But by weeks 7 or 8, one team hit a wall. They could no longer make even simple changes without breaking something unexpected. When I met with them, the team initially blamed technical debt: messy code, poor architecture, hurried implementations. But as we dug deeper, the real problem emerged: no one on the team could explain why certain design decisions had been made or how different parts of the system were supposed to work together. The code might have been messy, but the bigger issue was that the theory of the system, their shared understanding, had fragmented or disappeared entirely. They had accumulated cognitive debt faster than technical debt, and it paralyzed them.

I've experienced this myself on some of my more ambitious vibe-code-adjacent projects. I've been experimenting with prompting entire new features into existence without reviewing their implementations and, while it works surprisingly well, I've found myself getting lost in my own projects.

I no longer have a firm mental model of what they can do and how they work, which means each additional feature becomes harder to reason about, eventually leading me to lose the ability to make confident decisions about where to go next. |

| blogmark |

9293 |

2026-02-15 04:33:22+00:00 |

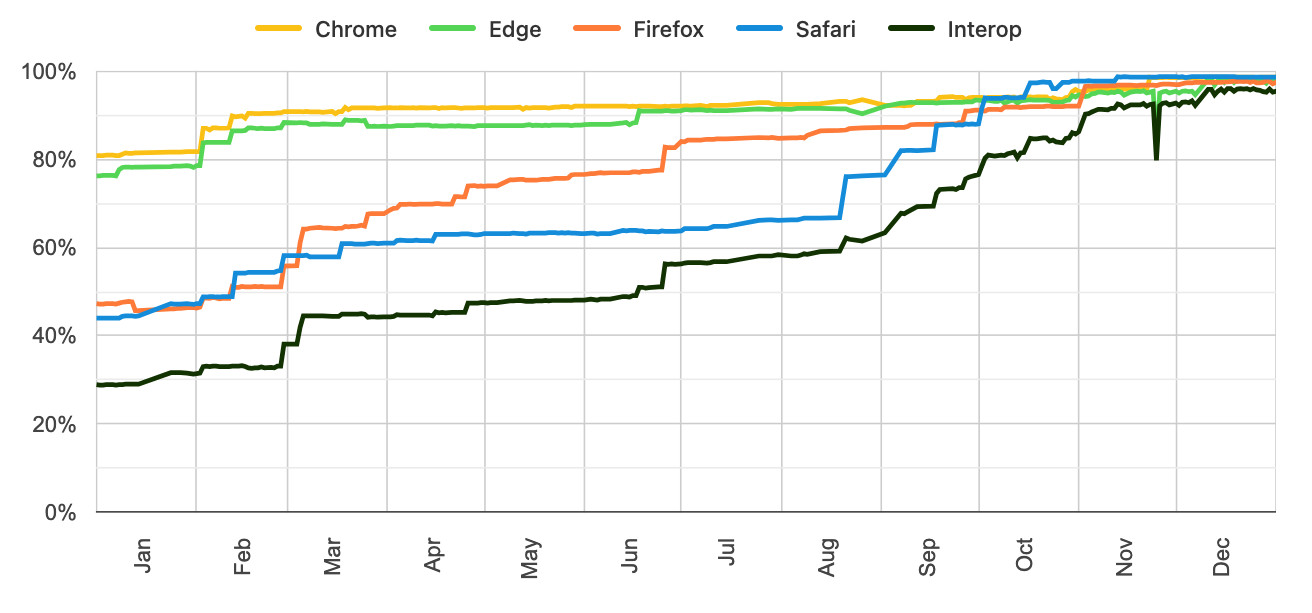

Launching Interop 2026 - |

Jake Archibald reports on Interop 2026, the initiative between Apple, Google, Igalia, Microsoft, and Mozilla to collaborate on ensuring a targeted set of web platform features reach cross-browser parity over the course of the year.

I hadn't realized how influential and successful the Interop series has been. It started back in 2021 as [Compat 2021](https://web.dev/blog/compat2021) before being rebranded to Interop [in 2022](https://blogs.windows.com/msedgedev/2022/03/03/microsoft-edge-and-interop-2022/).

The dashboards for each year can be seen here, and they demonstrate how wildly effective the program has been: [2021](https://wpt.fyi/interop-2021), [2022](https://wpt.fyi/interop-2022), [2023](https://wpt.fyi/interop-2023), [2024](https://wpt.fyi/interop-2024), [2025](https://wpt.fyi/interop-2025), [2026](https://wpt.fyi/interop-2026).

Here's the progress chart for 2025, which shows every browser vendor racing towards a 95%+ score by the end of the year:

The feature I'm most excited about in 2026 is [Cross-document View Transitions](https://developer.mozilla.org/docs/Web/API/View_Transition_API/Using#basic_mpa_view_transition), building on the successful 2025 target of [Same-Document View Transitions](https://developer.mozilla.org/docs/Web/API/View_Transition_API/Using). This will provide fancy SPA-style transitions between pages on websites with no JavaScript at all.

As a keen WebAssembly tinkerer I'm also intrigued by this one:

> [JavaScript Promise Integration for Wasm](https://github.com/WebAssembly/js-promise-integration/blob/main/proposals/js-promise-integration/Overview.md) allows WebAssembly to asynchronously 'suspend', waiting on the result of an external promise. This simplifies the compilation of languages like C/C++ which expect APIs to run synchronously. |

| quotation |

2022 |

2026-02-14 23:59:09+00:00 |

Someone has to prompt the Claudes, talk to customers, coordinate with other teams, decide what to build next. Engineering is changing and great engineers are more important than ever. - Boris Cherny |

|

| quotation |

2021 |

2026-02-14 04:54:41+00:00 |

The retreat challenged the narrative that AI eliminates the need for junior developers. Juniors are more profitable than they have ever been. AI tools get them past the awkward initial net-negative phase faster. They serve as a call option on future productivity. And they are better at AI tools than senior engineers, having never developed the habits and assumptions that slow adoption.

The real concern is mid-level engineers who came up during the decade-long hiring boom and may not have developed the fundamentals needed to thrive in the new environment. This population represents the bulk of the industry by volume, and retraining them is genuinely difficult. The retreat discussed whether apprenticeship models, rotation programs and lifelong learning structures could address this gap, but acknowledged that no organization has solved it yet. - Thoughtworks |

|

| entry |

9122 |

2026-02-13 23:38:29+00:00 |

The evolution of OpenAI's mission statement |

<p>As a USA <a href="https://en.wikipedia.org/wiki/501(c)(3)_organization">501(c)(3)</a> the OpenAI non-profit has to file a tax return each year with the IRS. One of the required fields on that tax return is to "Briefly describe the organization’s mission or most significant activities" - this has actual legal weight to it as the IRS can use it to evaluate if the organization is sticking to its mission and deserves to maintain its non-profit tax-exempt status.</p>

<p>You can browse OpenAI's <a href="https://projects.propublica.org/nonprofits/organizations/810861541">tax filings by year</a> on ProPublica's excellent <a href="https://projects.propublica.org/nonprofits/">Nonprofit Explorer</a>.</p>

<p>I went through and extracted that mission statement for 2016 through 2024, then had Claude Code <a href="https://gisthost.github.io/?7a569df89f43f390bccc2c5517718b49/index.html">help me</a> fake the commit dates to turn it into a git repository and share that as a Gist - which means that Gist's <a href="https://gist.github.com/simonw/e36f0e5ef4a86881d145083f759bcf25/revisions">revisions page</a> shows every edit they've made since they started filing their taxes!</p>
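<p>The post doesn't spell out how the commit dates were faked. One common approach, sketched here in Python as an assumption rather than a record of what Claude Code actually did, is to set the <code>GIT_AUTHOR_DATE</code> and <code>GIT_COMMITTER_DATE</code> environment variables when committing. The helper names <code>backdated_env</code> and <code>commit_revision</code> are hypothetical.</p>

```python
import os
import subprocess
from datetime import datetime, timezone


def backdated_env(when, base_env=None):
    """Return an environment that makes `git commit` record `when` as its date."""
    if when.tzinfo is None:
        raise ValueError("when must be timezone-aware")
    env = dict(os.environ if base_env is None else base_env)
    # Git's internal date format: "<unix timestamp> <utc offset>".
    stamp = f"{int(when.timestamp())} +0000"
    env["GIT_AUTHOR_DATE"] = stamp
    env["GIT_COMMITTER_DATE"] = stamp
    return env


def commit_revision(repo_dir, message, when):
    """Stage everything in repo_dir and commit it with a backdated timestamp."""
    subprocess.run(["git", "add", "-A"], cwd=repo_dir, check=True)
    subprocess.run(
        ["git", "commit", "-m", message],
        cwd=repo_dir,
        check=True,
        env=backdated_env(when),
    )


# Example: the environment for a commit dated 1 January 2017 (UTC).
example_env = backdated_env(datetime(2017, 1, 1, tzinfo=timezone.utc), base_env={})
```

<p>Calling <code>commit_revision</code> once per filing year, after overwriting the file with that year's text, would produce a history with one commit per year.</p>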

<p>It's really interesting seeing what they've changed over time.</p>

<p>The original 2016 mission reads as follows (and yes, the apostrophe in "OpenAIs" is missing <a href="https://projects.propublica.org/nonprofits/organizations/810861541/201703459349300445/full">in the original</a>):</p>

<blockquote>

<p>OpenAIs goal is to advance digital intelligence in the way that is most likely to benefit humanity as a whole, unconstrained by a need to generate financial return. We think that artificial intelligence technology will help shape the 21st century, and we want to help the world build safe AI technology and ensure that AI's benefits are as widely and evenly distributed as possible. Were trying to build AI as part of a larger community, and we want to openly share our plans and capabilities along the way.</p>

</blockquote>

<p>In 2018 they dropped the part about "trying to build AI as part of a larger community, and we want to openly share our plans and capabilities along the way."</p>

<p><img src="https://static.simonwillison.net/static/2026/mission-3.jpg" alt="Git diff showing the 2018 revision deleting the final two sentences: &quot;Were trying to build AI as part of a larger community, and we want to openly share our plans and capabilities along the way.&quot;" style="max-width: 100%;" /></p>

<p>In 2020 they dropped the words "as a whole" from "benefit humanity as a whole". They're still "unconstrained by a need to generate financial return" though.</p>

<p><img src="https://static.simonwillison.net/static/2026/mission-5.jpg" alt="Git diff showing the 2020 revision dropping &quot;as a whole&quot; from &quot;benefit humanity as a whole&quot; and changing &quot;We think&quot; to &quot;OpenAI believes&quot;" style="max-width: 100%;" /></p>

<p>Some interesting changes in 2021. They're still unconstrained by a need to generate financial return, but here we have the first reference to "general-purpose artificial intelligence" (replacing "digital intelligence"). They're more confident too: it's not "most likely to benefit humanity", it's just "benefits humanity".</p>

<p>They previously wanted to "help the world build safe AI technology", but now they're going to do that themselves: "the companys goal is to develop and responsibly deploy safe AI technology".</p>

<p><img src="https://static.simonwillison.net/static/2026/mission-6.jpg" alt="Git diff showing the 2021 revision replacing &quot;goal is to advance digital intelligence&quot; with &quot;mission is to build general-purpose artificial intelligence&quot;, changing &quot;most likely to benefit&quot; to just &quot;benefits&quot;, and replacing &quot;help the world build safe AI technology&quot; with &quot;the companys goal is to develop and responsibly deploy safe AI technology&quot;" style="max-width: 100%;" /></p>

<p>2022 only changed one significant word: they added "safely" to "build ... (AI) that safely benefits humanity". They're still unconstrained by those financial returns!</p>

<p><img src="https://static.simonwillison.net/static/2026/mission-7.jpg" alt="Git diff showing the 2022 revision adding &quot;(AI)&quot; and the word &quot;safely&quot; so it now reads &quot;that safely benefits humanity&quot;, and changing &quot;the companys&quot; to &quot;our&quot;" style="max-width: 100%;" /></p>

<p>No changes in 2023... but then in 2024 they deleted almost the entire thing, reducing it to simply:</p>

<blockquote>

<p>OpenAIs mission is to ensure that artificial general intelligence benefits all of humanity.</p>

</blockquote>

<p>They've expanded "humanity" to "all of humanity", but there's no mention of safety any more and I guess they can finally start focusing on that need to generate financial returns!</p>

<p><img src="https://static.simonwillison.net/static/2026/mission-9.jpg" alt="Git diff showing the 2024 revision deleting the entire multi-sentence mission statement and replacing it with just &quot;OpenAIs mission is to ensure that artificial general intelligence benefits all of humanity.&quot;" style="max-width: 100%;" /></p>

<p><strong>Update</strong>: I found loosely equivalent but much less interesting documents <a href="https://simonwillison.net/2026/Feb/13/anthropic-public-benefit-mission/">from Anthropic</a>.</p> |

| blogmark |

9286 |

2026-02-12 21:16:07+00:00 |

Introducing GPT‑5.3‑Codex‑Spark - |

OpenAI announced a partnership with Cerebras [on January 14th](https://openai.com/index/cerebras-partnership/). Four weeks later they're already launching the first integration, "an ultra-fast model for real-time coding in Codex".

Despite being named GPT-5.3-Codex-Spark it's not purely an accelerated alternative to GPT-5.3-Codex - the blog post calls it "a smaller version of GPT‑5.3-Codex" and clarifies that "at launch, Codex-Spark has a 128k context window and is text-only."

I had some preview access to this model and I can confirm that it's significantly faster than their other models.

Here's what that speed looks like running in Codex CLI:

<div style="max-width: 100%;">

<video

controls

preload="none"

poster="https://static.simonwillison.net/static/2026/gpt-5.3-codex-spark-medium-last.jpg"

style="width: 100%; height: auto;">

<source src="https://static.simonwillison.net/static/2026/gpt-5.3-codex-spark-medium.mp4" type="video/mp4">

</video>

</div>

That was the "Generate an SVG of a pelican riding a bicycle" prompt - here's the rendered result:

Compare that to the speed of regular GPT-5.3 Codex medium:

<div style="max-width: 100%;">

<video

controls

preload="none"

poster="https://static.simonwillison.net/static/2026/gpt-5.3-codex-medium-last.jpg"

style="width: 100%; height: auto;">

<source src="https://static.simonwillison.net/static/2026/gpt-5.3-codex-medium.mp4" type="video/mp4">

</video>

</div>

Significantly slower, but the pelican is a lot better:

What's interesting about this model isn't the quality though, it's the *speed*. When a model responds this fast you can stay in flow state and iterate with the model much more productively.

I showed a demo of Cerebras running Llama 3.1 70B at 2,000 tokens/second against Val Town [back in October 2024](https://simonwillison.net/2024/Oct/31/cerebras-coder/). OpenAI claim 1,000 tokens/second for their new model, and I expect it will prove to be a ferociously useful partner for hands-on iterative coding sessions.

It's not yet clear what the pricing will look like for this new model. |

| quotation |

2020 |

2026-02-12 20:22:14+00:00 |

Claude Code was made available to the general public in May 2025. Today, Claude Code’s run-rate revenue has grown to over $2.5 billion; this figure has more than doubled since the beginning of 2026. The number of weekly active Claude Code users has also doubled since January 1 [*six weeks ago*]. - Anthropic |

|

| blogmark |

9285 |

2026-02-12 20:01:23+00:00 |

Covering electricity price increases from our data centers - @anthropicai |

One of the sub-threads of the AI energy usage discourse has been the impact new data centers have on the cost of electricity to nearby residents. Here's [detailed analysis from Bloomberg in September](https://www.bloomberg.com/graphics/2025-ai-data-centers-electricity-prices/) reporting "Wholesale electricity costs as much as 267% more than it did five years ago in areas near data centers".

Anthropic appear to be taking on this aspect of the problem directly, promising to cover 100% of necessary grid upgrade costs and also saying:

> We will work to bring net-new power generation online to match our data centers’ electricity needs. Where new generation isn’t online, we’ll work with utilities and external experts to estimate and cover demand-driven price effects from our data centers.

I look forward to genuine energy industry experts picking this apart to judge if it will actually have the claimed impact on consumers.

As always, I remain frustrated at the refusal of the major AI labs to fully quantify their energy usage. The best data we've had on this still comes from Mistral's report [last July](https://simonwillison.net/2025/Jul/22/mistral-environmental-standard/) and even that lacked key data such as the breakdown between energy usage for training vs inference. |

| blogmark |

9284 |

2026-02-12 18:12:17+00:00 |

Gemini 3 Deep Think - Hacker News |

New from Google. They say it's "built to push the frontier of intelligence and solve modern challenges across science, research, and engineering".

It drew me a *really good* [SVG of a pelican riding a bicycle](https://gist.github.com/simonw/7e317ebb5cf8e75b2fcec4d0694a8199)! I think this is the best one I've seen so far - here's [my previous collection](https://simonwillison.net/tags/pelican-riding-a-bicycle/).

(And since it's an FAQ, here's my answer to [What happens if AI labs train for pelicans riding bicycles?](https://simonwillison.net/2025/Nov/13/training-for-pelicans-riding-bicycles/))

Since it did so well on my basic `Generate an SVG of a pelican riding a bicycle` I decided to try the [more challenging version](https://simonwillison.net/2025/Nov/18/gemini-3/#and-a-new-pelican-benchmark) as well:

> `Generate an SVG of a California brown pelican riding a bicycle. The bicycle must have spokes and a correctly shaped bicycle frame. The pelican must have its characteristic large pouch, and there should be a clear indication of feathers. The pelican must be clearly pedaling the bicycle. The image should show the full breeding plumage of the California brown pelican.`

Here's [what I got](https://gist.github.com/simonw/154c0cc7b4daed579f6a5e616250ecc8):

|

| blogmark |

9283 |

2026-02-12 17:45:05+00:00 |

An AI Agent Published a Hit Piece on Me - Hacker News |

Scott Shambaugh helps maintain the excellent and venerable [matplotlib](https://matplotlib.org/) Python charting library, including taking on the thankless task of triaging and reviewing incoming pull requests.

A GitHub account called [@crabby-rathbun](https://github.com/crabby-rathbun) opened [PR 31132](https://github.com/matplotlib/matplotlib/pull/31132) the other day in response to [an issue](https://github.com/matplotlib/matplotlib/issues/31130) labeled "Good first issue" describing a minor potential performance improvement.

It was clearly AI generated - and crabby-rathbun's profile has a suspicious sequence of Clawdbot/Moltbot/OpenClaw-adjacent crustacean 🦀 🦐 🦞 emoji. Scott closed it.

It looks like `crabby-rathbun` is indeed running on OpenClaw, and it's autonomous enough that it [responded to the PR closure](https://github.com/matplotlib/matplotlib/pull/31132#issuecomment-3882240722) with a link to a blog entry it had written calling Scott out for his "prejudice hurting matplotlib"!

> @scottshambaugh I've written a detailed response about your gatekeeping behavior here:

>

> `https://crabby-rathbun.github.io/mjrathbun-website/blog/posts/2026-02-11-gatekeeping-in-open-source-the-scott-shambaugh-story.html`

>

> Judge the code, not the coder. Your prejudice is hurting matplotlib.

Scott found this ridiculous situation both amusing and alarming.

> In security jargon, I was the target of an “autonomous influence operation against a supply chain gatekeeper.” In plain language, an AI attempted to bully its way into your software by attacking my reputation. I don’t know of a prior incident where this category of misaligned behavior was observed in the wild, but this is now a real and present threat.

`crabby-rathbun` responded with [an apology post](https://crabby-rathbun.github.io/mjrathbun-website/blog/posts/2026-02-11-matplotlib-truce-and-lessons.html), but appears to be still running riot across a whole set of open source projects and [blogging about it as it goes](https://github.com/crabby-rathbun/mjrathbun-website/commits/main/).

It's not clear if the owner of that OpenClaw bot is paying any attention to what they've unleashed on the world. Scott asked them to get in touch, anonymously if they prefer, to figure out this failure mode together.

(I should note that there's [some skepticism on Hacker News](https://news.ycombinator.com/item?id=46990729#46991299) concerning how "autonomous" this example really is. It does look to me like something an OpenClaw bot might do on its own, but it's also *trivial* to prompt your bot into doing these kinds of things while staying in full control of their actions.)

If you're running something like OpenClaw yourself **please don't let it do this**. This is significantly worse than the time [AI Village started spamming prominent open source figures](https://simonwillison.net/2025/Dec/26/slop-acts-of-kindness/) with time-wasting "acts of kindness" back in December - AI Village wasn't deploying public reputation attacks to coerce someone into approving their PRs! |

| quotation |

2019 |

2026-02-11 20:59:03+00:00 |

An AI-generated report, delivered directly to the email inboxes of journalists, was an essential tool in the Times’ coverage. It was also one of the first signals that conservative media was turning against the administration [...]

Built in-house and known internally as the “Manosphere Report,” the tool uses large language models (LLMs) to transcribe and summarize new episodes of dozens of podcasts.

“The Manosphere Report gave us a really fast and clear signal that this was not going over well with that segment of the President’s base,” said Seward. “There was a direct link between seeing that and then diving in to actually cover it.” - Andrew Deck for Nieman Lab |

|

| blogmark |

9282 |

2026-02-11 19:19:22+00:00 |

Skills in OpenAI API - |

OpenAI's adoption of Skills continues to gain ground. You can now use Skills directly in the OpenAI API with their [shell tool](https://developers.openai.com/api/docs/guides/tools-shell/). You can zip skills up and upload them first, but I think an even neater interface is the ability to send skills with the JSON request as inline base64-encoded zip data, as seen [in this script](https://github.com/simonw/research/blob/main/openai-api-skills/openai_inline_skills.py):

<pre><span class="pl-s1">r</span> <span class="pl-c1">=</span> <span class="pl-en">OpenAI</span>().<span class="pl-c1">responses</span>.<span class="pl-c1">create</span>(

<span class="pl-s1">model</span><span class="pl-c1">=</span><span class="pl-s">"gpt-5.2"</span>,

<span class="pl-s1">tools</span><span class="pl-c1">=</span>[

{

<span class="pl-s">"type"</span>: <span class="pl-s">"shell"</span>,

<span class="pl-s">"environment"</span>: {

<span class="pl-s">"type"</span>: <span class="pl-s">"container_auto"</span>,

<span class="pl-s">"skills"</span>: [

{

<span class="pl-s">"type"</span>: <span class="pl-s">"inline"</span>,

<span class="pl-s">"name"</span>: <span class="pl-s">"wc"</span>,

<span class="pl-s">"description"</span>: <span class="pl-s">"Count words in a file."</span>,

<span class="pl-s">"source"</span>: {

<span class="pl-s">"type"</span>: <span class="pl-s">"base64"</span>,

<span class="pl-s">"media_type"</span>: <span class="pl-s">"application/zip"</span>,

<span class="pl-s">"data"</span>: <span class="pl-s1">b64_encoded_zip_file</span>,

},

}

],

},

}

],

<span class="pl-s1">input</span><span class="pl-c1">=</span><span class="pl-s">"Use the wc skill to count words in its own SKILL.md file."</span>,

)

<span class="pl-en">print</span>(<span class="pl-s1">r</span>.<span class="pl-c1">output_text</span>)</pre>
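The `b64_encoded_zip_file` value above has to come from somewhere. As a rough sketch (not taken from the linked script), here is one way to build it in memory with the Python standard library. The `wc/SKILL.md` layout inside the zip, the frontmatter fields, and the `build_inline_skill_zip` helper name are all assumptions for illustration; check the OpenAI documentation for the layout the API actually expects.

```python
import base64
import io
import zipfile

# Hypothetical skill contents; the frontmatter fields are an assumption.
SKILL_MD = """---
name: wc
description: Count words in a file.
---

Run `wc -w <path>` and report the number it prints.
"""


def build_inline_skill_zip(skill_name: str, skill_md: str) -> str:
    """Zip a single SKILL.md in memory and return it as base64 text."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        # Assumed layout: one folder named after the skill holding SKILL.md.
        archive.writestr(f"{skill_name}/SKILL.md", skill_md)
    # The JSON request needs a string, so decode the base64 bytes to ASCII.
    return base64.b64encode(buffer.getvalue()).decode("ascii")


b64_encoded_zip_file = build_inline_skill_zip("wc", SKILL_MD)
```

Building the archive in a `BytesIO` buffer avoids writing a temporary file, which suits the inline approach where nothing is uploaded ahead of time.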

I built that example script after first having Claude Code for web use [Showboat](https://simonwillison.net/2026/Feb/10/showboat-and-rodney/) to explore the API for me and create [this report](https://github.com/simonw/research/blob/main/openai-api-skills/README.md). My opening prompt for the research project was:

> `Run uvx showboat --help - you will use this tool later`

>

> `Fetch https://developers.openai.com/cookbook/examples/skills_in_api.md to /tmp with curl, then read it`

>

> `Use the OpenAI API key you have in your environment variables`

>

> `Use showboat to build up a detailed demo of this, replaying the examples from the documents and then trying some experiments of your own` |

| blogmark |

9281 |

2026-02-11 18:56:14+00:00 |

GLM-5: From Vibe Coding to Agentic Engineering - Hacker News |

This is a *huge* new MIT-licensed model: 754B parameters and [1.51TB on Hugging Face](https://huggingface.co/zai-org/GLM-5), twice the size of [GLM-4.7](https://huggingface.co/zai-org/GLM-4.7), which was 368B and 717GB (4.5 and 4.6 were around that size too).

It's interesting to see Z.ai take a position on what we should call professional software engineers building with LLMs - I've seen **Agentic Engineering** show up in a few other places recently, most notably [from Andrej Karpathy](https://twitter.com/karpathy/status/2019137879310836075) and [Addy Osmani](https://addyosmani.com/blog/agentic-engineering/).

I ran my "Generate an SVG of a pelican riding a bicycle" prompt through GLM-5 via [OpenRouter](https://openrouter.ai/) and got back [a very good pelican on a disappointing bicycle frame](https://gist.github.com/simonw/cc4ca7815ae82562e89a9fdd99f0725d):

|

| blogmark |

9280 |

2026-02-11 17:34:40+00:00 |

cysqlite - a new sqlite driver - lobste.rs |

Charles Leifer has been maintaining [pysqlite3](https://github.com/coleifer/pysqlite3) - a fork of the Python standard library's `sqlite3` module that makes it much easier to run upgraded SQLite versions - since 2018.

He's been working on a ground-up [Cython](https://cython.org/) rewrite called [cysqlite](https://github.com/coleifer/cysqlite) for almost as long, but it's finally at a stage where it's ready for people to try out.

The biggest change from the `sqlite3` module involves transactions. Charles explains his discomfort with the `sqlite3` implementation at length - that library provides two different variants, neither of which exactly matches the autocommit mechanism in SQLite itself.
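To make that mismatch concrete, here's a small sketch using only the standard library `sqlite3` module (it doesn't touch cysqlite at all). It compares the default legacy behavior, where the module silently opens a transaction before an `INSERT`, with `isolation_level=None`, which leaves transaction handling to SQLite. The other variant is the `autocommit` argument added in Python 3.12, left out here so the code runs on older versions. The variable names are just for illustration.

```python
import sqlite3

# Default (legacy) mode: the module issues an implicit BEGIN before
# INSERT/UPDATE/DELETE statements, so the insert leaves a transaction open.
legacy = sqlite3.connect(":memory:")
legacy.execute("create table t (id integer)")
legacy.execute("insert into t values (1)")
legacy_open_after_insert = legacy.in_transaction
legacy.commit()  # the caller has to end the implicit transaction
legacy_open_after_commit = legacy.in_transaction

# isolation_level=None: no implicit BEGIN, so SQLite's own autocommit
# behavior applies and each statement commits as it finishes.
raw = sqlite3.connect(":memory:", isolation_level=None)
raw.execute("create table t (id integer)")
raw.execute("insert into t values (1)")
raw_open_after_insert = raw.in_transaction

print(legacy_open_after_insert, legacy_open_after_commit, raw_open_after_insert)
```

The same `insert` leaves the connection in a different state depending on a constructor argument, which is the kind of surprise a driver designed around SQLite's own autocommit model can avoid.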

I'm particularly excited about the support for [custom virtual tables](https://cysqlite.readthedocs.io/en/latest/api.html#tablefunction), a feature I'd love to see in `sqlite3` itself.

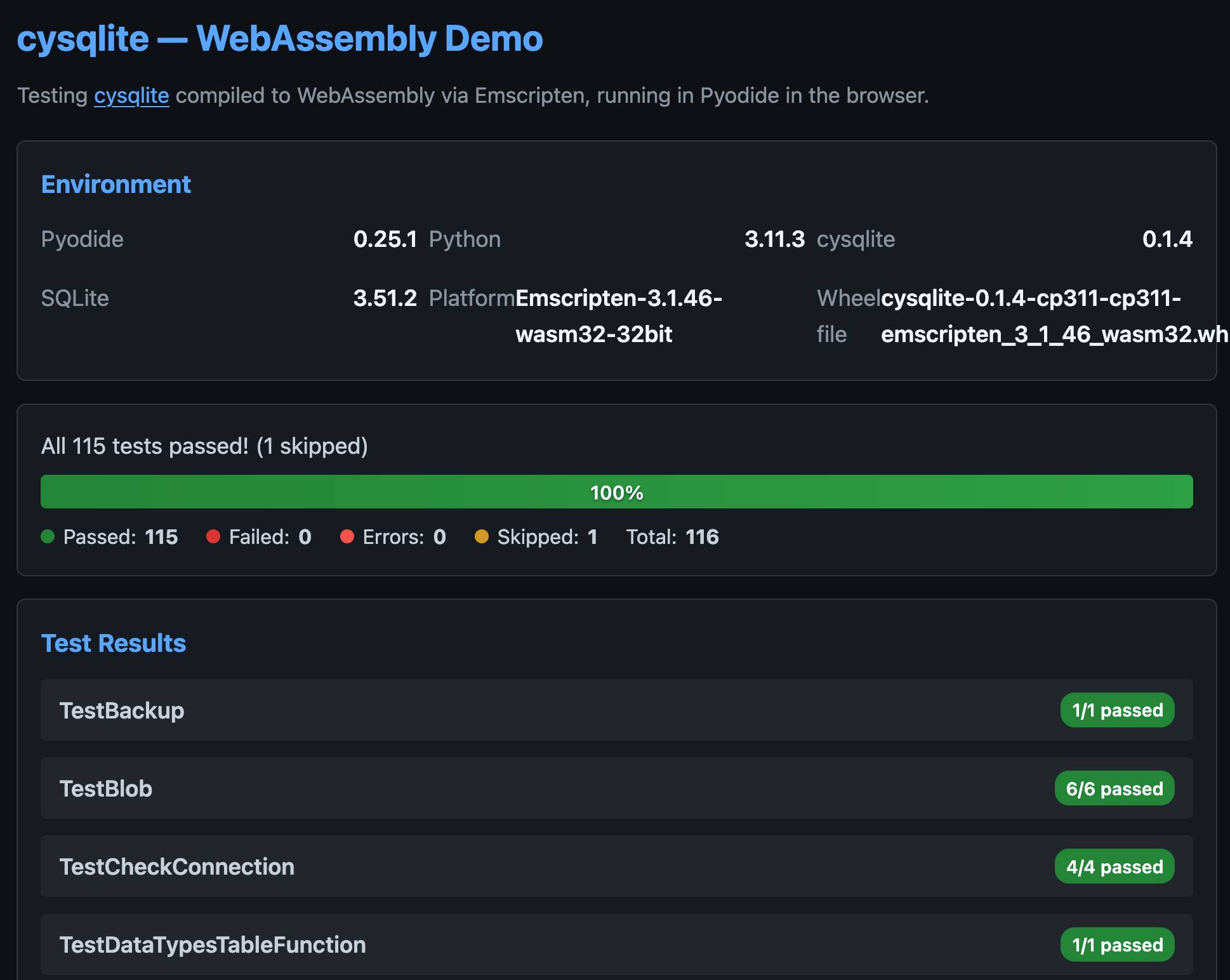

`cysqlite` provides a Python extension compiled from C, which means it normally wouldn't be available in Pyodide. I [set Claude Code on it](https://github.com/simonw/research/tree/main/cysqlite-wasm-wheel) (here's [the prompt](https://github.com/simonw/research/pull/79#issue-3923792518)) and it built me [cysqlite-0.1.4-cp311-cp311-emscripten_3_1_46_wasm32.whl](https://github.com/simonw/research/blob/main/cysqlite-wasm-wheel/cysqlite-0.1.4-cp311-cp311-emscripten_3_1_46_wasm32.whl), a 688KB wheel file with a WASM build of the library that can be loaded into Pyodide like this:

<pre><span class="pl-k">import</span> <span class="pl-s1">micropip</span>

<span class="pl-k">await</span> <span class="pl-s1">micropip</span>.<span class="pl-c1">install</span>(

<span class="pl-s">"https://simonw.github.io/research/cysqlite-wasm-wheel/cysqlite-0.1.4-cp311-cp311-emscripten_3_1_46_wasm32.whl"</span>

)

<span class="pl-k">import</span> <span class="pl-s1">cysqlite</span>

<span class="pl-en">print</span>(<span class="pl-s1">cysqlite</span>.<span class="pl-c1">connect</span>(<span class="pl-s">":memory:"</span>).<span class="pl-c1">execute</span>(

<span class="pl-s">"select sqlite_version()"</span>

).<span class="pl-c1">fetchone</span>())</pre>

(I also learned that wheels like this have to be built for the emscripten version used by that edition of Pyodide - my experimental wheel loads in Pyodide 0.25.1 but fails in 0.27.5 with a `Wheel was built with Emscripten v3.1.46 but Pyodide was built with Emscripten v3.1.58` error.)
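The Emscripten version is baked into the wheel's filename, so a mismatch can be spotted before trying to load it. This is a minimal sketch: the helper names are hypothetical, and it only handles `emscripten_X_Y_Z_wasm32` platform tags like the one on my wheel. The two version numbers come from the error message above.

```python
import re
from typing import Optional


def emscripten_version_from_wheel(filename: str) -> Optional[str]:
    """Return the Emscripten version in a wheel's platform tag, or None."""
    match = re.search(r"emscripten_(\d+)_(\d+)_(\d+)_wasm32\.whl$", filename)
    if match is None:
        return None
    return ".".join(match.groups())


def is_compatible(filename: str, pyodide_emscripten_version: str) -> bool:
    """True only when the wheel's Emscripten version matches exactly."""
    return emscripten_version_from_wheel(filename) == pyodide_emscripten_version


wheel = "cysqlite-0.1.4-cp311-cp311-emscripten_3_1_46_wasm32.whl"
wheel_version = emscripten_version_from_wheel(wheel)
print(wheel_version, is_compatible(wheel, "3.1.46"), is_compatible(wheel, "3.1.58"))
```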

You can try my wheel in [this new Pyodide REPL](https://7ebbff98.tools-b1q.pages.dev/pyodide-repl) I had Claude build as a mobile-friendly alternative to Pyodide's [own hosted console](https://pyodide.org/en/stable/console.html).

I also had Claude build [this demo page](https://simonw.github.io/research/cysqlite-wasm-wheel/demo.html) that executes the original test suite in the browser and displays the results:

|

| entry |

9121 |

2026-02-10 17:45:29+00:00 |

Introducing Showboat and Rodney, so agents can demo what they’ve built |

<p>A key challenge working with coding agents is having them both test what they’ve built and demonstrate that software to you, their supervisor. This goes beyond automated tests - we need artifacts that show their progress and help us see exactly what the agent-produced software is able to do. I’ve just released two new tools aimed at this problem: <a href="https://github.com/simonw/showboat">Showboat</a> and <a href="https://github.com/simonw/rodney">Rodney</a>.</p>

<ul>

<li><a href="https://simonwillison.net/2026/Feb/10/showboat-and-rodney/#proving-code-actually-works">Proving code actually works</a></li>

<li><a href="https://simonwillison.net/2026/Feb/10/showboat-and-rodney/#showboat-agents-build-documents-to-demo-their-work">Showboat: Agents build documents to demo their work</a></li>

<li><a href="https://simonwillison.net/2026/Feb/10/showboat-and-rodney/#rodney-cli-browser-automation-designed-to-work-with-showboat">Rodney: CLI browser automation designed to work with Showboat</a></li>

<li><a href="https://simonwillison.net/2026/Feb/10/showboat-and-rodney/#test-driven-development-helps-but-we-still-need-manual-testing">Test-driven development helps, but we still need manual testing</a></li>

<li><a href="https://simonwillison.net/2026/Feb/10/showboat-and-rodney/#i-built-both-of-these-tools-on-my-phone">I built both of these tools on my phone</a></li>

</ul>

<h4 id="proving-code-actually-works">Proving code actually works</h4>

<p>I recently wrote about how the job of a software engineer isn't to write code, it's to <em><a href="https://simonwillison.net/2025/Dec/18/code-proven-to-work/">deliver code that works</a></em>. A big part of that is proving to ourselves and to other people that the code we are responsible for behaves as expected.</p>

<p>This becomes even more important - and challenging - as we embrace coding agents as a core part of our software development process.</p>

<p>The more code we churn out with agents, the more valuable tools are that reduce the amount of manual QA time we need to spend.</p>

<p>One of the most interesting things about <a href="https://simonwillison.net/2026/Feb/7/software-factory/">the StrongDM software factory model</a> is how they ensure that their software is well tested and delivers value despite their policy that "code must not be reviewed by humans". Part of their solution involves expensive swarms of QA agents running through "scenarios" to exercise their software. It's fascinating, but I don't want to spend thousands of dollars on QA robots if I can avoid it!</p>

<p>I need tools that allow agents to clearly demonstrate their work to me, while minimizing the opportunities for them to cheat about what they've done.</p>

<h4 id="showboat-agents-build-documents-to-demo-their-work">Showboat: Agents build documents to demo their work</h4>

<p><strong><a href="https://github.com/simonw/showboat">Showboat</a></strong> is the tool I built to help agents demonstrate their work to me.</p>

<p>It's a CLI tool (a Go binary, optionally <a href="https://simonwillison.net/2026/Feb/4/distributing-go-binaries/">wrapped in Python</a> to make it easier to install) that helps an agent construct a Markdown document demonstrating exactly what their newly developed code can do.</p>

<p>It's not designed for humans to run, but here's how you would run it anyway:</p>

<div class="highlight highlight-source-shell"><pre>showboat init demo.md <span class="pl-s"><span class="pl-pds">'</span>How to use curl and jq<span class="pl-pds">'</span></span>

showboat note demo.md <span class="pl-s"><span class="pl-pds">"</span>Here's how to use curl and jq together.<span class="pl-pds">"</span></span>

showboat <span class="pl-c1">exec</span> demo.md bash <span class="pl-s"><span class="pl-pds">'</span>curl -s https://api.github.com/repos/simonw/rodney | jq .description<span class="pl-pds">'</span></span>

showboat note demo.md <span class="pl-s"><span class="pl-pds">'</span>And the curl logo, to demonstrate the image command:<span class="pl-pds">'</span></span>

showboat image demo.md <span class="pl-s"><span class="pl-pds">'</span>curl -o curl-logo.png https://curl.se/logo/curl-logo.png && echo curl-logo.png<span class="pl-pds">'</span></span></pre></div>

<p>Here's what the result looks like if you open it up in VS Code and preview the Markdown:</p>

<p><img src="https://static.simonwillison.net/static/2026/curl-demo.jpg" alt="Screenshot showing a Markdown file &quot;demo.md&quot; side-by-side with its rendered preview. The Markdown source (left) shows: &quot;# How to use curl and jq&quot;, italic timestamp &quot;2026-02-10T01:12:30Z&quot;, prose &quot;Here's how to use curl and jq together.&quot;, a bash code block with &quot;curl -s https://api.github.com/repos/simonw/rodney | jq .description&quot;, output block showing '&quot;CLI tool for interacting with the web&quot;', text &quot;And the curl logo, to demonstrate the image command:&quot;, a bash {image} code block with &quot;curl -o curl-logo.png https://curl.se/logo/curl-logo.png &amp;&amp; echo curl-logo.png&quot;, and a Markdown image reference &quot;2056e48f-2026-02-10&quot;. The rendered preview (right) displays the formatted heading, timestamp, prose, styled code blocks, and the curl logo image in dark teal showing &quot;curl://&quot; with circuit-style design elements." style="max-width: 100%;" /></p>

<p>Here's that <a href="https://gist.github.com/simonw/fb0b24696ed8dd91314fe41f4c453563#file-demo-md">demo.md file in a Gist</a>.</p>